Revisiting the Idea of the "False Positive"

Blog Article Published: 08/30/2022

Originally published by Gigamon here.

Written by Joe Slowik, Principal Security Engineer, Applied Threat Research, Gigamon.

Background

A common refrain in security circles concerns the chore of handling “false positive” alerts and detection results. “False positive” alerts correlate with security operations center (SOC) inefficiency and even SOC analyst burnout, making them an item of significant concern for managers and leaders. Yet such discussions hinge on a very specific, and arguably incorrect, understanding of what a “false positive” event truly means.

While a retreat to formal definitions in the face of very real problems may appear pedantic, in this case significant nuance and understanding are lost through a misinformed perspective of what a false positive alert actually means. In this blog, we will examine the idea of the false positive more thoroughly, arrive at a rigorous definition of the term, and show how this improved understanding can support better management and comprehension of security operations.

Defining “False Positive”

The ideas of false positives (and false negatives) originate in statistical hypothesis testing. In this framework, an analyst rigorously evaluates available data against a quantifiable, measurable hypothesis to determine whether a proposed relationship exists in observed data. Through this work, the analyst seeks to reject the assumption that no relationship exists in the observations (commonly referred to as the null hypothesis) in order to support the alternative hypothesis put forth to explain the data and the proposed relationship. Security operations follow the same overall logic but typically frame it the other way around: we first assume some relationship exists (i.e., the alert or detection is true), and the burden then falls on confirming that relationship given available evidence.

The above process yields a matrix of possible outcomes depending on the results of the underlying statistical testing:

| | Null hypothesis is true | Null hypothesis is false |
|---|---|---|
| Rejected | Type I error – False positive | True positive – Relationship exists |
| Accepted | True negative – No relationship exists | Type II error – False negative |

The value of this framework lies in providing a rigorous way to evaluate observations. A false positive, strictly speaking, is an instance where the null hypothesis is true (i.e., the proposed relationship or observation does not hold), but we incorrectly reject it (i.e., conclude that the relationship does in fact exist). In security operations, this would mean we postulate a relationship (e.g., that given network traffic is associated with a certain behavior) when that relationship is not present (the targeted behavior did not actually occur). A false negative, on the other hand, is an instance where the null hypothesis is false, but we incorrectly accept it as true. For security operations, this would mean we postulate a relationship or behavior but incorrectly conclude that no such behavior exists due to some error in visibility or judgment.
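As a minimal sketch, the four cells of the outcome table can be expressed as a lookup on two facts: whether the null hypothesis is actually true, and whether we rejected it. The names `Outcome` and `classify` are hypothetical and used only for illustration.

```python
from enum import Enum


class Outcome(Enum):
    TRUE_POSITIVE = "true positive: relationship exists"
    FALSE_POSITIVE = "Type I error: false positive"
    TRUE_NEGATIVE = "true negative: no relationship exists"
    FALSE_NEGATIVE = "Type II error: false negative"


def classify(null_is_true: bool, null_rejected: bool) -> Outcome:
    """Return the table cell for a given reality (null_is_true) and decision (null_rejected)."""
    if null_rejected:
        # We concluded the proposed relationship exists.
        return Outcome.FALSE_POSITIVE if null_is_true else Outcome.TRUE_POSITIVE
    # We concluded no relationship exists.
    return Outcome.TRUE_NEGATIVE if null_is_true else Outcome.FALSE_NEGATIVE


if __name__ == "__main__":
    for null_is_true in (True, False):
        for null_rejected in (True, False):
            cell = classify(null_is_true, null_rejected)
            print(f"null true={null_is_true}, rejected={null_rejected}: {cell.value}")
```

Note that "false" in both error cases describes our decision, not the event: the decision disagrees with what actually holds.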

Put simply:

- A false positive means we improperly accept an outcome as valid when the underlying criteria are not met

- A false negative is the opposite: we accept the lack of a relationship or outcome when that relationship or outcome is in fact present

The implications of these circumstances for decision-making are significant: in both cases, our conclusion about the hypothesized relationship does not match actual events. Continuing to act on these conclusions therefore represents a mismatch between expectation and reality, placing organizations at risk. With false positives, we react to situations that do not actually exist, while with false negatives, we fail to recognize that a relationship (and a potential threat behavior) is present. Both circumstances have significant repercussions for security operations, as discussed in the next section.

False Positives in Security Operations

Colloquially, a false positive security event is when a given detection or alert fires, but the corresponding event is not malicious. For example, a detection looking for enumeration of accounts in Active Directory may fire during a normal synchronization among domain controllers in a Windows network environment. When analyzing this event, SOC personnel or other responders will almost always label this benign activity, which triggered a security event, as a false positive. Strictly speaking, however, it is not one.

The detection described in the previous paragraph looks for signs of a behavior that can be associated with malicious activity (environment survey prior to lateral movement). In this case, our hypothesis relates to the presence or absence of a given behavior. Critically, such activity can take place in known-good, expected actions as well as in potentially malicious circumstances. As such, the detection firing when the specifically sought activity takes place represents a true positive in terms of the detection’s performance and accuracy. What distinguishes this item as potentially malicious is the context in which it took place. In our hypothetical, the AD enumeration activity relates to expected DC synchronization behaviors, but if our analysis of why the activity took place revealed other, malicious reasons, we could identify an intrusion at a relatively early stage of network and account survey.

An actual false positive relating to the above activity would be if a detection fired for AD enumeration behaviors, but subsequent investigation indicated that such behavior did not actually take place. In this scenario, we reveal a detection engineering failure, requiring revision to our criteria that produced the incorrect alert. The opposite of this situation would be AD enumeration activity — whether malicious or otherwise — taking place, but the underlying detection not firing, also known as a false negative event.
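The distinction drawn in the last two paragraphs can be sketched in Python. This is an illustration under stated assumptions, not a real detection: `Observation`, `detection_outcome`, and `triage_verdict` are hypothetical names, and each observation is assumed to already record whether the enumeration behavior happened, whether the detection fired, and the analyst's verdict. The detection is scored against the behavior it targets, while maliciousness is a separate, context-driven judgment.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    behavior_occurred: bool  # did AD enumeration actually take place?
    detection_fired: bool    # did the detection alert?
    malicious: bool = False  # analyst verdict from context; only meaningful if the behavior occurred


def detection_outcome(obs: Observation) -> str:
    """Score the detection against the behavior it targets, not against maliciousness."""
    if obs.detection_fired:
        return "true positive" if obs.behavior_occurred else "false positive"
    return "false negative" if obs.behavior_occurred else "true negative"


def triage_verdict(obs: Observation) -> str:
    """Separate, context-driven question answered after the detection is scored."""
    if not obs.detection_fired:
        return "no alert raised"
    if not obs.behavior_occurred:
        # A genuine false positive points at the detection logic itself.
        return "detection logic needs revision"
    return "malicious" if obs.malicious else "benign"


if __name__ == "__main__":
    cases = {
        "DC synchronization": Observation(behavior_occurred=True, detection_fired=True),
        "Alert, but no enumeration happened": Observation(behavior_occurred=False, detection_fired=True),
        "Attacker enumeration, no alert": Observation(behavior_occurred=True, detection_fired=False, malicious=True),
    }
    for name, obs in cases.items():
        print(f"{name}: {detection_outcome(obs)} / {triage_verdict(obs)}")
```

In this sketch the DC synchronization case scores as a true positive that triage then marks benign, which is exactly the case commonly mislabeled as a false positive.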

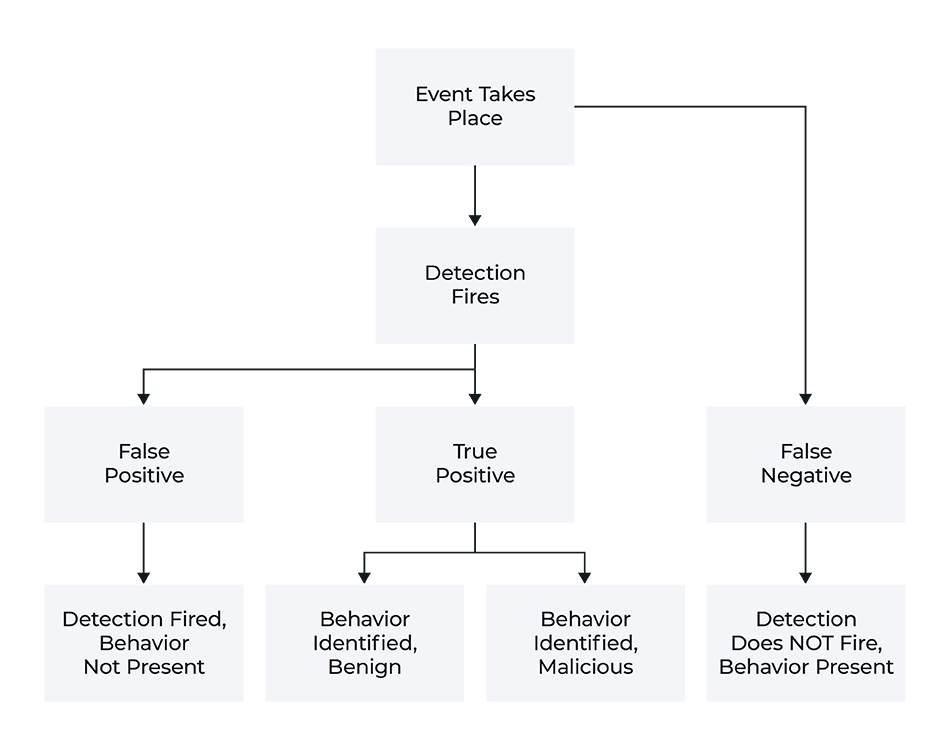

As illustrated in the figure above, there are multiple routes from “event occurs” through “detection identifies event” to “event becomes a security concern.” Understanding these routes — from those requiring no (immediate) security response to those that avoid security scrutiny — is key to understanding the implications behind false positives and false negatives. The next section addresses this concern and how a detailed understanding of false positives and false negatives, accurately construed, impacts security operations.

Implications of a Rigorous Definition of False Positives for Security

Adopting a more accurate and thorough understanding of false positive events highlights that the issue around such events does not reside in the alert or detection itself, but rather in the hypothesis underlying the detection logic. Using the same AD enumeration example as in the previous section, a “high number of (presumed) false positives” around the event due to alerting on benign instances of the activity is not a failing in the detection logic — rather, it would appear to be working as designed, firing on even benign instances of the underlying activity. Instead, the issue is in the motivating hypothesis of the detection, that such activity (and the gathered context around it) is highly correlated with malicious events.
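One way to make this separation concrete is to track two ratios per detection instead of a single "false positive rate." The sketch below is a hypothetical illustration: it assumes each closed alert records whether investigation confirmed the targeted behavior and whether the activity was judged malicious, and the alert mix is invented rather than measured.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Alert:
    behavior_occurred: bool  # investigation confirmed the targeted behavior happened
    malicious: bool          # triage verdict based on surrounding context


def detection_precision(alerts: List[Alert]) -> float:
    """Fraction of alerts where the targeted behavior really happened (quality of the detection logic)."""
    if not alerts:
        return 0.0
    return sum(a.behavior_occurred for a in alerts) / len(alerts)


def malicious_rate(alerts: List[Alert]) -> float:
    """Fraction of genuine detections that were malicious (support for the motivating hypothesis)."""
    genuine = [a for a in alerts if a.behavior_occurred]
    if not genuine:
        return 0.0
    return sum(a.malicious for a in genuine) / len(genuine)


if __name__ == "__main__":
    # Invented alert mix for the AD enumeration example.
    alerts = (
        [Alert(behavior_occurred=True, malicious=False)] * 98  # benign DC synchronization
        + [Alert(behavior_occurred=True, malicious=True)]      # one intrusion
        + [Alert(behavior_occurred=False, malicious=False)]    # one genuine detection error
    )
    print(f"detection precision: {detection_precision(alerts):.2f}")
    print(f"malicious rate:      {malicious_rate(alerts):.2f}")
```

With this invented mix, precision is 0.99 while the malicious rate is about 0.01: the detection logic works as designed, and it is the hypothesis linking the behavior to malicious activity that needs refinement.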

Logically speaking, our detection in this simple example is valid, but if the preponderance of detection instances is not of malicious origin, we may have logic that is unsound. The underlying hypothesis and related observations marking malicious activity may thus require revision. For example, modifying the AD enumeration detection to ignore activity taking place between known or likely legitimate domain controllers could refine the detection and eliminate one potential source of unsound alerts.
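A minimal sketch of this refinement follows. It assumes a hypothetical event shape (`src_ip`, `dst_ip`, `accounts_queried`), an arbitrary threshold of 500, and a hard-coded set of domain controller addresses; none of these come from a real product or from the original detection.

```python
from dataclasses import dataclass

# Hypothetical inventory of legitimate domain controllers. In practice this would be
# maintained from an asset inventory or directory data rather than hard-coded.
KNOWN_DCS = frozenset({"10.0.0.10", "10.0.0.11"})


@dataclass
class EnumerationEvent:
    src_ip: str
    dst_ip: str
    accounts_queried: int  # hypothetical field: accounts enumerated in the time window


def ad_enum_original(e: EnumerationEvent, threshold: int = 500) -> bool:
    """Hypothetical original logic: fire on any large account enumeration."""
    return e.accounts_queried >= threshold


def ad_enum_refined(e: EnumerationEvent, threshold: int = 500) -> bool:
    """Same behavior test, but skip traffic where both endpoints are known DCs."""
    if e.src_ip in KNOWN_DCS and e.dst_ip in KNOWN_DCS:
        return False
    return ad_enum_original(e, threshold)


if __name__ == "__main__":
    dc_sync = EnumerationEvent("10.0.0.10", "10.0.0.11", accounts_queried=2000)
    workstation = EnumerationEvent("10.0.5.23", "10.0.0.10", accounts_queried=2000)
    print(ad_enum_original(dc_sync), ad_enum_refined(dc_sync))          # True False
    print(ad_enum_original(workstation), ad_enum_refined(workstation))  # True True
```

The exclusion is intentionally narrow: both endpoints must be known DCs, so enumeration originating from any other host still alerts.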

However, if we simply ignored or removed the detection for this activity because of a perception of high numbers of “false positives,” we would lose a potentially powerful tool for analyzing our network environment and skip the appropriate troubleshooting steps needed to improve the deployed detection. In this case, we would trade a high number of superficial “false positives” for circumstances in which we (implicitly) accept multiple false negative results. In security operations, where a false negative means an alert does not fire, this translates into a lack of awareness of events taking place in the environment. When this situation arises out of alert “tuning,” practitioners essentially remove visibility into a behavior of interest.
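The trade-off between removing and tuning a detection can be illustrated with a toy comparison. Everything here is hypothetical: four invented AD enumeration occurrences (three benign DC-to-DC, one malicious from a workstation) and three response strategies, each expressed as a function that decides whether an alert fires.

```python
from typing import Callable, Dict, List

# Hypothetical AD enumeration occurrences: the behavior happened in every case.
Event = Dict[str, object]
EVENTS: List[Event] = [
    {"src": "dc", "dst": "dc", "malicious": False},
    {"src": "dc", "dst": "dc", "malicious": False},
    {"src": "dc", "dst": "dc", "malicious": False},
    {"src": "workstation", "dst": "dc", "malicious": True},
]

# Three ways to respond to a "noisy" detection; each returns True if it would alert.
STRATEGIES: Dict[str, Callable[[Event], bool]] = {
    "keep as-is": lambda e: True,
    "tune (skip DC-to-DC)": lambda e: not (e["src"] == "dc" and e["dst"] == "dc"),
    "remove detection": lambda e: False,
}


def evaluate(fires: Callable[[Event], bool], events: List[Event]) -> Dict[str, int]:
    """Count alerts raised and malicious occurrences that go unseen."""
    alerts = sum(1 for e in events if fires(e))
    missed_malicious = sum(1 for e in events if e["malicious"] and not fires(e))
    return {"alerts": alerts, "missed_malicious": missed_malicious}


if __name__ == "__main__":
    for name, fires in STRATEGIES.items():
        print(f"{name}: {evaluate(fires, EVENTS)}")
```

Keeping the detection as-is raises 4 alerts and misses nothing; tuning raises 1 alert and still catches the intrusion; removing it raises none and misses the malicious occurrence entirely.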

Conclusion

False positives represent a real concern in security operations and detection engineering, but dealing with them appropriately requires an understanding of precisely what the term means. Failure to appreciate how and why events trigger in detection development and incident response means not only a weakened security posture, but also the potential loss of situational awareness and visibility linked to acceptance of an increasing rate of false negative events. By adopting a more rigorous understanding of detection function and performance assessment, security leaders and decision-makers can better equip themselves to properly adjust and improve the performance of security alerting mechanisms within their respective environments.
