Resource Constraints in Kubernetes and Security

Published 05/06/2024

A Practical Guide

Originally published by Sysdig.

Written by Nigel Douglas.

The Sysdig 2024 Cloud‑Native Security and Usage Report highlights the evolving threat landscape. More importantly, it shows that as adoption of cloud-native technologies such as containers and Kubernetes continues to increase, not all organizations are following best practices. This ultimately hands attackers an advantage when it comes to hijacking container resources in environments such as Kubernetes.

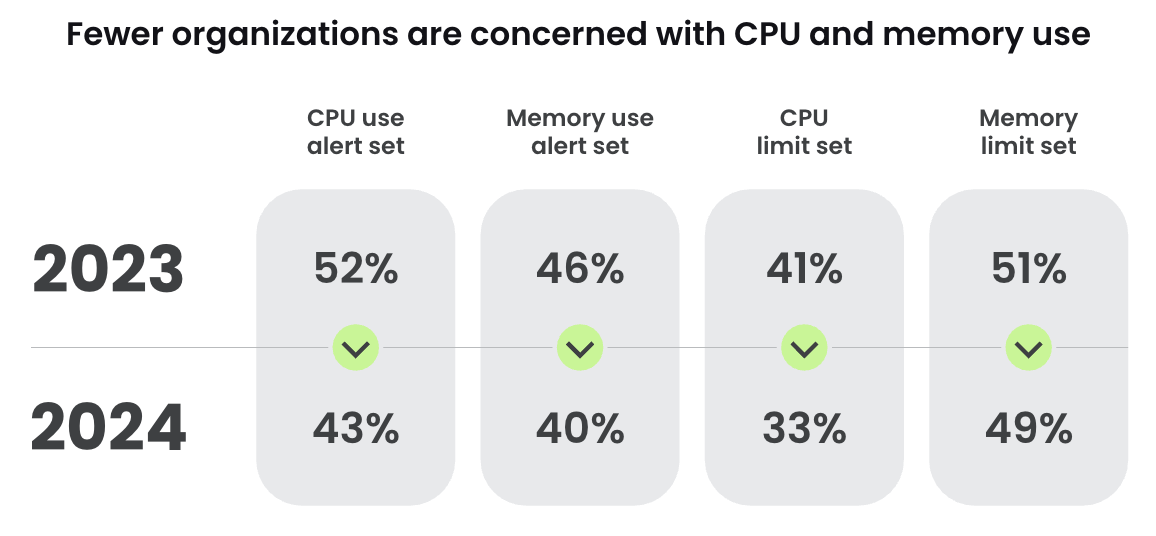

Balancing resource management with security is not just a technical challenge, but also a strategic imperative. Surprisingly, Sysdig’s latest research report found that fewer than half of Kubernetes environments have alerts for CPU and memory usage, and the majority lack maximum limits on these resources. This trend isn’t just about overlooking a security practice; it reflects a prioritization of availability and development agility over potential security risks.

The security risks of unchecked resources

Unlimited resource allocation in Kubernetes pods presents a golden opportunity for attackers. Without constraints, malicious actors can exploit your environment, launching cryptojacking attacks or using lateral movement to target other systems within your network. The absence of resource limits not only escalates security risks but can also lead to substantial financial losses from the resources these attackers consume unchecked.

A cost-effective security strategy

In the current economic landscape, where every penny counts, understanding and managing resource usage is as much a financial strategy as it is a security one. By identifying and reducing unnecessary resource consumption, organizations can achieve significant cost savings – a crucial aspect in both cloud and container environments.

Enforcing resource constraints in Kubernetes

Implementing resource constraints in Kubernetes is straightforward yet impactful. To apply resource constraints to an example deployment of the atomicred tool, users can simply modify the deployment manifest to include resource requests and limits.

Here’s how the Kubernetes project recommends enforcing those changes:

<code># Create the namespace first if it does not exist yet
kubectl create namespace atomic-red
kubectl apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: atomicred
  namespace: atomic-red
  labels:
    app: atomicred
spec:
  replicas: 1
  selector:
    matchLabels:
      app: atomicred
  template:
    metadata:
      labels:
        app: atomicred
    spec:
      containers:
      - name: atomicred
        image: issif/atomic-red:latest
        imagePullPolicy: "IfNotPresent"
        command: ["sleep", "3560d"]
        securityContext:
          privileged: true
        resources:
          requests:
            memory: "64Mi"
            cpu: "250m"
          limits:
            memory: "128Mi"
            cpu: "500m"
      nodeSelector:
        kubernetes.io/os: linux
EOF</code>

In this manifest, we set both requests and limits for CPU and memory as follows:

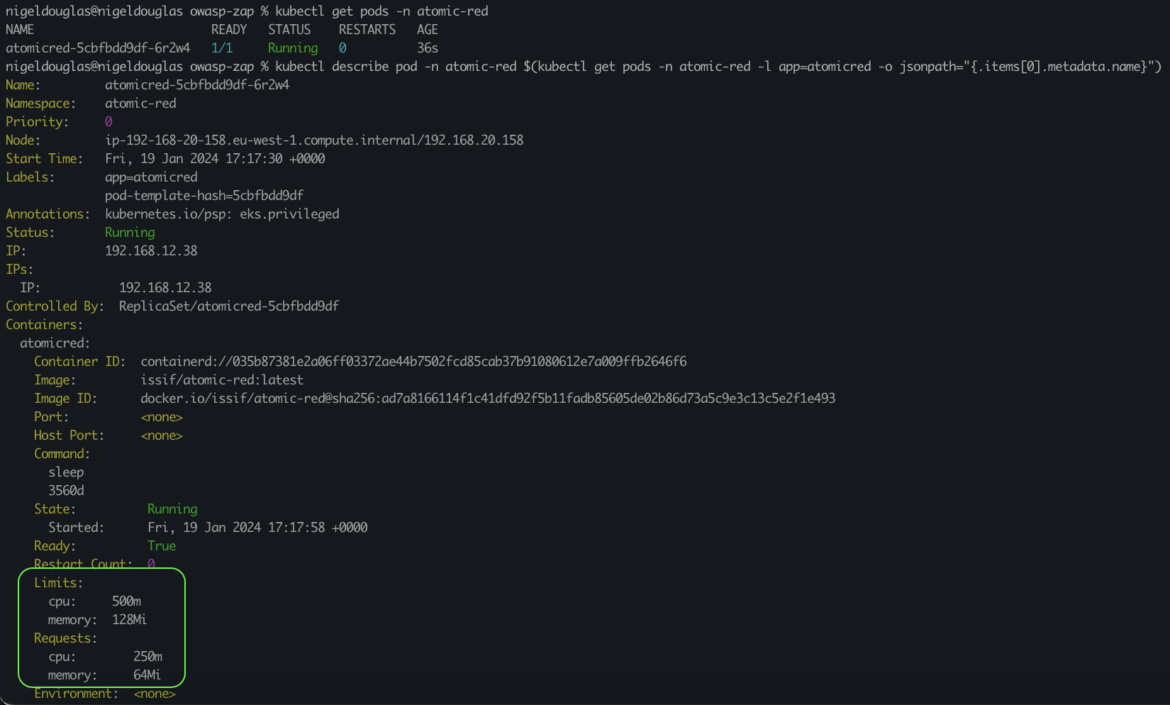

- requests: The amount of CPU and memory that Kubernetes guarantees to the container. In this case, that is 64Mi of memory and 250m of CPU (where 1000m equals one CPU core).

- limits: The maximum amount of CPU and memory the container is allowed to use. Here, that is 128Mi of memory and 500m of CPU. If the container tries to exceed these limits, its CPU usage is throttled, while exceeding the memory limit gets it killed and possibly restarted.
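To make the units concrete, here is a minimal Python sketch that converts quantity strings like the ones above into CPU cores and bytes. It is an assumption-laden simplification: it handles only the `m` CPU suffix and the common binary (`Ki`, `Mi`, `Gi`, `Ti`) and decimal (`k`, `M`, `G`, `T`) memory suffixes, while the real Kubernetes quantity format accepts more (for example, exponent notation). The function names are hypothetical.

```python
# Memory suffixes: binary (powers of two) and decimal (powers of ten).
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL_SUFFIXES = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity such as "250m" or "2" into cores (1000m == 1 core)."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as "64Mi" or "150M" into bytes."""
    for suffixes in (BINARY_SUFFIXES, DECIMAL_SUFFIXES):
        for suffix, factor in suffixes.items():
            if quantity.endswith(suffix):
                return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: plain bytes


print(parse_cpu("250m"), parse_cpu("500m"))         # 0.25 0.5
print(parse_memory("64Mi"), parse_memory("128Mi"))  # 67108864 134217728
```

The distinction between `Mi` (2^20 bytes) and `M` (10^6 bytes) matters when comparing a workload's memory use against a limit.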

This setup ensures that the atomicred tool is allocated enough resources to function efficiently, while preventing it from consuming excessive resources that could impact other processes in your Kubernetes cluster. The requests guarantee that the container gets at least the specified resources, while the limits ensure it never goes beyond the defined ceiling. This setup not only optimizes resource utilization but also guards against resource depletion attacks.
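The "guarantee" provided by requests is enforced at scheduling time: a pod is only placed on a node whose unreserved allocatable capacity covers the pod's requests. The Python sketch below is a deliberately simplified model of that check. It considers only CPU and memory requests and ignores limits, other resources, and scheduler plugins. The node capacity is a hypothetical figure.

```python
from dataclasses import dataclass

MI = 2**20  # bytes in one mebibyte


@dataclass
class Resources:
    cpu_millicores: int
    memory_bytes: int


def fits(allocatable: Resources, requested: Resources, pod: Resources) -> bool:
    """Simplified fit check: the pod's requests must fit in the node's unreserved capacity."""
    free_cpu = allocatable.cpu_millicores - requested.cpu_millicores
    free_memory = allocatable.memory_bytes - requested.memory_bytes
    return pod.cpu_millicores <= free_cpu and pod.memory_bytes <= free_memory


def max_replicas(allocatable: Resources, pod: Resources) -> int:
    """How many copies of the pod an empty node can hold, judged by requests alone."""
    bounds = []
    if pod.cpu_millicores > 0:
        bounds.append(allocatable.cpu_millicores // pod.cpu_millicores)
    if pod.memory_bytes > 0:
        bounds.append(allocatable.memory_bytes // pod.memory_bytes)
    if not bounds:
        raise ValueError("a pod with no requests is never limited by this check")
    return min(bounds)


atomicred = Resources(cpu_millicores=250, memory_bytes=64 * MI)  # requests from the manifest
node = Resources(cpu_millicores=2000, memory_bytes=4096 * MI)    # hypothetical node

print(max_replicas(node, atomicred))  # 8, because CPU (2000m / 250m) runs out first
```

Because placement is judged on requests rather than actual usage, the limits are what stop a compromised container from eating into its neighbors' share once it is running.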

Monitoring resource constraints in Kubernetes

To check the resource constraints of a running pod in Kubernetes, use the kubectl describe command. The following command describes the first pod in the atomic-red namespace that has the label app=atomicred.

<code>kubectl describe pod -n atomic-red $(kubectl get pods -n atomic-red -l app=atomicred -o jsonpath="{.items[0].metadata.name}")</code>
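If you want the requests and limits in machine-readable form rather than as describe output, `kubectl get pod <name> -n atomic-red -o json` returns the full pod object. Each container's settings live under `spec.containers[].resources`. The Python sketch below extracts them from that JSON. The sample document is a trimmed, hand-written stand-in for real output, and the helper name is hypothetical.

```python
import json


def container_resources(pod: dict) -> dict:
    """Map each container name to the requests and limits found in a pod object."""
    result = {}
    for container in pod.get("spec", {}).get("containers", []):
        resources = container.get("resources", {})
        result[container["name"]] = {
            "requests": resources.get("requests", {}),
            "limits": resources.get("limits", {}),
        }
    return result


# Hand-written sample shaped like `kubectl get pod ... -o json` output (heavily trimmed).
SAMPLE = """
{"metadata": {"name": "atomicred-example"},
 "spec": {"containers": [{"name": "atomicred",
   "resources": {"requests": {"cpu": "250m", "memory": "64Mi"},
                 "limits": {"cpu": "500m", "memory": "128Mi"}}}]}}
"""

if __name__ == "__main__":
    # To use real data, replace SAMPLE with the text printed by kubectl.
    for name, res in container_resources(json.loads(SAMPLE)).items():
        print(name, "requests:", res["requests"], "limits:", res["limits"])
```

A container with no `resources` block shows up with empty dictionaries, which makes unconstrained containers easy to spot.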


What happens if we abuse these limits?

To test CPU and memory limits, you can run a container that deliberately tries to consume more resources than its limits allow. Keep in mind that the two resources behave differently:

- CPU: If a container attempts to use more CPU resources than its limit, Kubernetes will throttle the CPU usage of the container. This means the container won’t be terminated but will run slower.

- Memory: If a container tries to use more memory than its limit, it is terminated once it exceeds the limit: the Linux kernel's OOM killer ends the process, and Kubernetes records the reason as OOMKilled. This is known as an Out Of Memory (OOM) kill.
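The asymmetry comes from how limits are enforced on Linux. The kubelet translates a CPU limit into a CFS bandwidth quota, by default measured per 100 ms period. A process that wants more CPU simply waits for the next period. A memory limit, by contrast, becomes a hard cgroup ceiling. The Python sketch below models both outcomes in simplified form. The function names are hypothetical, and real enforcement happens in the kernel, not in code like this.

```python
CFS_PERIOD_US = 100_000  # default CFS period used for CPU limits: 100 ms


def cpu_limit_to_quota_us(limit_millicores: int, period_us: int = CFS_PERIOD_US) -> int:
    """CPU time (microseconds) a container may use in each CFS period."""
    return limit_millicores * period_us // 1000


def cpu_outcome(demand_millicores: int, limit_millicores: int) -> str:
    # Demand above the quota is not fatal: the excess waits for the next period.
    return "throttled" if demand_millicores > limit_millicores else "ok"


def memory_outcome(usage_bytes: int, limit_bytes: int) -> str:
    # Memory cannot be deferred: staying above the ceiling (once the kernel
    # cannot reclaim enough) ends with the OOM killer terminating the process.
    return "OOMKilled" if usage_bytes > limit_bytes else "ok"


MI = 2**20
print(cpu_limit_to_quota_us(500))          # 50000, i.e. 50 ms of CPU per 100 ms
print(cpu_outcome(1000, 500))              # throttled
print(memory_outcome(150 * MI, 128 * MI))  # OOMKilled
```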

Creating a stress test container

You can create a new deployment that intentionally stresses the resources.

For example, you can use a tool like stress to consume CPU and memory deliberately:

<code>kubectl apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: resource-stress-test
  namespace: atomic-red
spec:
  replicas: 1
  selector:
    matchLabels:
      app: resource-stress-test
  template:
    metadata:
      labels:
        app: resource-stress-test
    spec:
      containers:
      - name: stress
        image: polinux/stress
        resources:
          limits:
            memory: "128Mi"
            cpu: "500m"
        command: ["stress"]
        args: ["--vm", "1", "--vm-bytes", "150M", "--vm-hang", "1"]
EOF</code>

The deployment specification defines a single container using the image polinux/stress, which is commonly used for generating workload and stress testing. The resources section sets only limits: memory is capped at 128Mi and CPU at 500m. Because no requests are specified, Kubernetes defaults the requests to the same values as the limits. The stress arguments, however, ask for 150M of memory, which exceeds the 128Mi limit.

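The command and args tell stress (not Kubernetes) to start one memory worker (--vm 1). That worker repeatedly allocates 150M (--vm-bytes 150M) and holds each allocation for one second before freeing it (--vm-hang 1). This is a common way to perform stress testing with this container image.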
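Two details of this manifest can be checked with a little arithmetic. First, when a container sets limits but no requests, and no LimitRange supplies a default, Kubernetes copies each limit into the missing request. This pod therefore effectively requests 128Mi and 500m. Second, 150M exceeds 128Mi whether stress reads the suffix as decimal or binary megabytes. The Python sketch below models both points; `effective_requests` is a hypothetical helper, not a Kubernetes API.

```python
MI = 2**20
LIMIT_BYTES = 128 * MI  # memory limit "128Mi" from the manifest


def effective_requests(requests: dict, limits: dict) -> dict:
    """Simplified defaulting: a resource with a limit but no request inherits the limit."""
    merged = dict(requests)
    for resource, value in limits.items():
        merged.setdefault(resource, value)
    return merged


# The stress-test container sets only limits.
print(effective_requests({}, {"memory": "128Mi", "cpu": "500m"}))
# {'memory': '128Mi', 'cpu': '500m'}

# --vm-bytes 150M read as decimal or as binary megabytes: both exceed the limit.
for label, allocation in (("150 * 10**6", 150 * 10**6), ("150 * 2**20", 150 * MI)):
    print(label, allocation, "exceeds limit:", allocation > LIMIT_BYTES)
```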

As the screenshot below shows, the OOMKilled status appears. This means the container was killed for exceeding its memory limit. If an attacker had been running a cryptomining binary inside the container at the time of the OOM kill, that process would have been terminated along with it. The container would then be restarted from its original image, since a Deployment's pods always use restartPolicy: Always. That restart discards anything the attacker wrote to the container's own filesystem, such as the mining binary, although files written to mounted volumes would persist.
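A container that keeps getting OOM-killed does not restart instantly. According to the Kubernetes documentation, the kubelet applies an exponential back-off: it starts at 10 seconds, doubles after each restart, and is capped at five minutes. The pod reports CrashLoopBackOff while it waits, and the timer resets once the container runs for 10 minutes without problems. The Python sketch below computes that schedule from those documented defaults; the function name is hypothetical.

```python
def restart_delays(restarts: int, initial_s: int = 10, cap_s: int = 300) -> list[int]:
    """Back-off delay (seconds) before each successive restart of a crashing container."""
    delays = []
    delay = initial_s
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap_s)  # double, but never exceed the five-minute cap
    return delays


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```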

A commitment to open source

The lack of enforced resource constraints in numerous organizations' Kubernetes environments underscores a critical gap in current security frameworks and highlights the urgent need for greater awareness. In response, we contributed our findings to the OWASP Top 10 framework for Kubernetes, since missing resource limits are a clear example of insecure workload configuration. Because the OWASP framework is open source, we proposed this enhancement as a Pull Request (PR) on GitHub, and the contribution was incorporated into the framework. Contributing to established security awareness frameworks in this way not only bolsters cloud-native security but also enhances its transparency, marking a pivotal step towards a more secure and aware cloud-native ecosystem.

Bridging Security and Scalability

The perceived complexity of maintaining, monitoring, and modifying resource constraints can often deter organizations from implementing these critical security measures. Given the dynamic nature of development environments, where application needs can fluctuate based on demand, feature rollouts, and scalability requirements, it’s understandable why teams might view resource limits as a potential barrier to agility. However, this perspective overlooks the inherent flexibility of Kubernetes’ resource management capabilities, and more importantly, the critical role of cross-functional communication in optimizing these settings for both security and performance.

The art of flexible constraints

Kubernetes offers a sophisticated model for managing resource constraints that does not inherently stifle application growth or operational flexibility. Through the use of requests and limits, Kubernetes allows for the specification of minimum resources guaranteed for a container (requests) and a maximum cap (limits) that a container cannot exceed. This model provides a framework within which applications can operate efficiently, scaling within predefined bounds that ensure security without compromising on performance.

The key to leveraging this model effectively lies in adopting a continuous evaluation and adjustment approach. Regularly reviewing resource utilization metrics can provide valuable insights into how applications are performing against their allocated resources, identifying opportunities to adjust constraints to better align with actual needs. This iterative process ensures that resource limits remain relevant, supportive of application demands, and protective against security vulnerabilities.
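One way to make that review concrete is to derive candidate values from observed usage. The Python sketch below applies a common rule of thumb, not an official Kubernetes algorithm: set the request near median usage and the limit at a high percentile plus headroom. The percentiles, the 20% headroom, and the sample data are all hypothetical choices to tune for your own workloads.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def suggest(samples: list[float], request_pct: float = 50, limit_pct: float = 99,
            headroom: float = 0.2) -> tuple[float, float]:
    """Return (request, limit) suggestions in the same unit as the samples."""
    request = percentile(samples, request_pct)
    limit = percentile(samples, limit_pct) * (1 + headroom)
    return request, limit


# Hypothetical CPU usage samples in millicores, e.g. one per minute.
cpu_millicores = [120, 140, 150, 160, 180, 200, 210, 230, 260, 400]
request, limit = suggest(cpu_millicores)
print(f"request ~{request:.0f}m, limit ~{limit:.0f}m")
```

Rerunning a calculation like this on fresh metrics at each review keeps the limits tied to actual demand rather than to a one-off guess.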

Fostering open communication lines

At the core of successfully implementing flexible resource constraints is the collaboration between development, operations, and security teams. Open lines of communication are essential for understanding application requirements, sharing insights on potential security implications of configuration changes, and making informed decisions on resource allocation.

Encouraging a culture of transparency and collaboration can demystify the process of adjusting resource limits, making it a routine part of the development lifecycle rather than a daunting task. Regular cross-functional meetings, shared dashboards of resource utilization and performance metrics, and a unified approach to incident response can foster a more integrated team dynamic.

Simplifying maintenance, monitoring, and modification

With the right tools and practices in place, resource management can be streamlined and integrated into the existing development workflow. Automation tools can simplify the deployment and update of resource constraints, while monitoring solutions can provide real-time visibility into resource utilization and performance.
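As an example of that kind of automation, the Python sketch below audits a Deployment for containers that are missing CPU or memory requests or limits. It assumes the Deployment has already been parsed into a Python dict, for instance from `kubectl get deployment <name> -o json`. This is a hypothetical helper for illustration only. Within Kubernetes itself, a namespace LimitRange can apply default requests and limits at admission time.

```python
REQUIRED_RESOURCES = ("cpu", "memory")


def missing_resources(deployment: dict,
                      kinds: tuple[str, ...] = ("requests", "limits")) -> list[str]:
    """Return findings such as 'stress: no requests.cpu' for a Deployment object."""
    findings = []
    pod_spec = deployment.get("spec", {}).get("template", {}).get("spec", {})
    containers = pod_spec.get("containers", []) + pod_spec.get("initContainers", [])
    for container in containers:
        resources = container.get("resources", {})
        for kind in kinds:
            for name in REQUIRED_RESOURCES:
                if name not in resources.get(kind, {}):
                    findings.append(f"{container['name']}: no {kind}.{name}")
    return findings


# Trimmed version of the resource-stress-test Deployment, which sets only limits.
stress_test = {
    "kind": "Deployment",
    "metadata": {"name": "resource-stress-test"},
    "spec": {"template": {"spec": {"containers": [{
        "name": "stress",
        "image": "polinux/stress",
        "resources": {"limits": {"memory": "128Mi", "cpu": "500m"}},
    }]}}},
}

for finding in missing_resources(stress_test):
    print(finding)
```

Kubernetes defaults missing requests to the limits, so a team might reasonably run the check with `kinds=("limits",)` to flag only containers that have no ceiling at all.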

Training and empowerment, coupled with clear guidelines and easy-to-use tools, can make adjusting resource constraints a straightforward task that supports both security posture and operational agility.

Conclusion

Setting resource limits in Kubernetes transcends being a mere security measure; it’s a pivotal strategy that harmoniously balances operational efficiency with robust security. This practice gains even more significance in the light of evolving cloud-native threats, particularly cryptomining attacks, which are increasingly becoming a preferred method for attackers due to their low-effort, high-reward nature.

Reflecting on the 2022 Cloud-Native Threat Report, we observe a noteworthy trend. The Sysdig Threat Research team profiled TeamTNT, a notorious cloud-native threat actor known for targeting both cloud and container environments, predominantly for cryptomining. Their research underlines a startling economic imbalance: cryptojacking costs victims an astonishing $53 for every $1 an attacker earns from stolen resources. This disparity highlights the financial impact of such attacks, beyond the security breach itself.

TeamTNT’s approach shows why attackers choose to exploit environments where container resource limits are undefined or unmonitored. Without constraints on, or oversight of, container resource usage, attackers have an open field to deploy cryptojacking malware, turning unmonitored resources into profit at their victims' expense.
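Closing that monitoring gap can start with something as simple as alerting when a container's CPU usage stays close to its limit for a sustained stretch. That pattern is typical of cryptominers, which try to use all the CPU they can get. The Python sketch below is a hypothetical rule, not a Sysdig or Kubernetes feature. The 90% threshold, the five-sample window, and the sample values are assumptions, and a real setup would read usage from its metrics pipeline.

```python
def sustained_high_cpu(usage_millicores: list[int], limit_millicores: int,
                       threshold: float = 0.9, window: int = 5) -> bool:
    """True if usage stays at or above threshold * limit for `window` consecutive samples."""
    streak = 0
    for sample in usage_millicores:
        # Extend the streak on a high sample, reset it on a normal one.
        streak = streak + 1 if sample >= threshold * limit_millicores else 0
        if streak >= window:
            return True
    return False


# Hypothetical per-minute samples for a container with a 500m CPU limit.
normal = [120, 300, 470, 200, 150, 480, 90]
suspicious = [130, 460, 480, 495, 500, 499, 500]
print(sustained_high_cpu(normal, 500))      # False
print(sustained_high_cpu(suspicious, 500))  # True
```

A rule like this only works when a limit exists: without one, there is no ceiling to measure usage against.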

In light of these insights, it becomes evident that implementing resource constraints and monitoring resource usage in Kubernetes are not just best practices for security and operational efficiency; they are essential defenses against a growing trend of financially draining cryptomining attacks. As Kubernetes continues to evolve, the importance of these practices only escalates. Organizations must proactively adapt by setting appropriate resource limits and establishing vigilant monitoring systems, ensuring a secure, efficient, and financially sound environment in the face of such insidious threats.
