Using ChatGPT for Cloud Security Audits

Published 06/08/2023

Written by Ashwin Chaudhary, CEO, Accedere.

ChatGPT is an artificial intelligence chatbot developed by OpenAI and released in November 2022. It is built on OpenAI's GPT (Generative Pre-trained Transformer) series of large language models, which includes GPT-3 and the GPT-3.5 models that ChatGPT was originally based on. These models are state-of-the-art technology for natural language processing.

These models are trained on vast amounts of text data and can generate human-like responses to text inputs. This ability to understand a prompt and reply conversationally makes ChatGPT well suited to conversational interfaces in areas such as customer service, education, and healthcare. It can also be used to automate repetitive tasks, improve efficiency, and support data analysis with real-time insights.

Despite the many benefits of ChatGPT, there are also concerns about its potential misuse, particularly for generating fake news or other malicious content. As a result, OpenAI has at times restricted access to its models, for example by releasing some of them first to selected partners and researchers.

Limitations of ChatGPT:

- Limited Expertise

- Lack of Common Sense

- Bias

- Security Risks

- Overreliance on Technology

- Lack of Emotional Intelligence

It is important to be aware of these limitations and to ensure that ChatGPT is used in conjunction with human expertise and oversight.

Current Usage of ChatGPT in Cloud Environments:

It is difficult to state precisely how widely ChatGPT is used in cloud environments, because adoption varies with factors such as business needs, the size of the organization, and the resources available to implement the system.

According to a report by MarketsandMarkets, the global natural language processing (NLP) market, which includes chatbots like ChatGPT, is expected to grow from $10.2 billion in 2019 to $26.4 billion by 2024, at a compound annual growth rate (CAGR) of 21.0% during the forecast period. This growth is expected to be driven by the increasing adoption of NLP-based solutions across industries, including healthcare, banking, financial services, and insurance (BFSI), retail, and others.
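As a quick arithmetic check, the stated growth rate is consistent with the start and end values over the five years from 2019 to 2024:

```latex
\[
\mathrm{CAGR} = \left(\frac{V_{2024}}{V_{2019}}\right)^{1/5} - 1
             = \left(\frac{26.4}{10.2}\right)^{1/5} - 1
             \approx 2.588^{0.2} - 1
             \approx 0.209
\]
```

That is roughly 20.9% per year, which matches the reported 21.0% once rounding of the dollar figures is taken into account.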

Moreover, major cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer pre-built NLP models and APIs, and Microsoft offers OpenAI's models, including those behind ChatGPT, through its Azure OpenAI Service. This makes it easier for organizations to integrate ChatGPT-style capabilities into their cloud environments and has further accelerated their adoption.

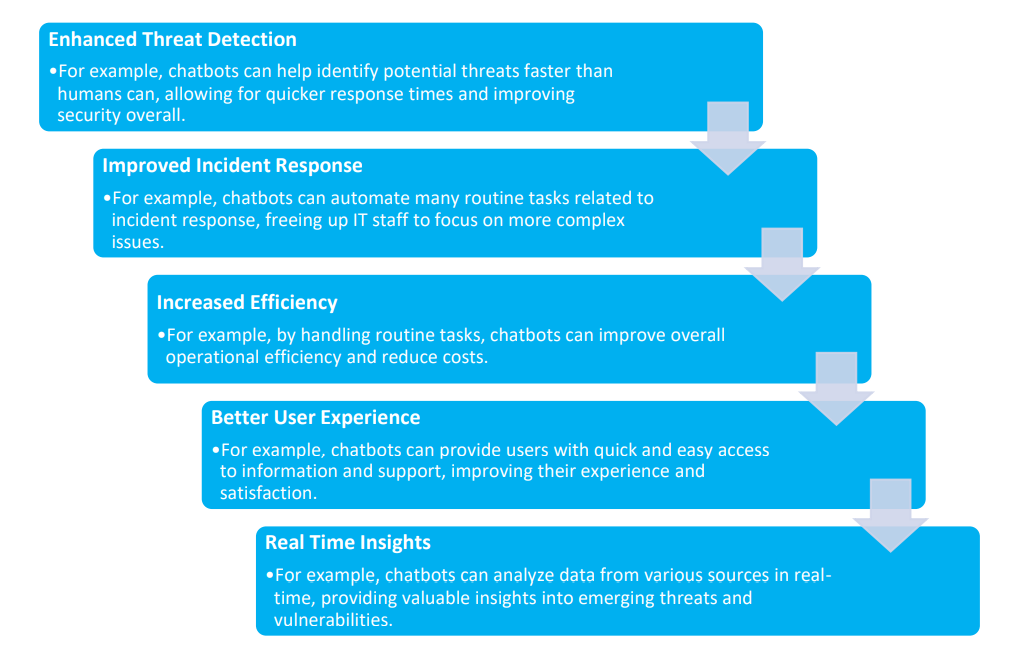

Positive Impact of Using ChatGPT in Cloud Environments:

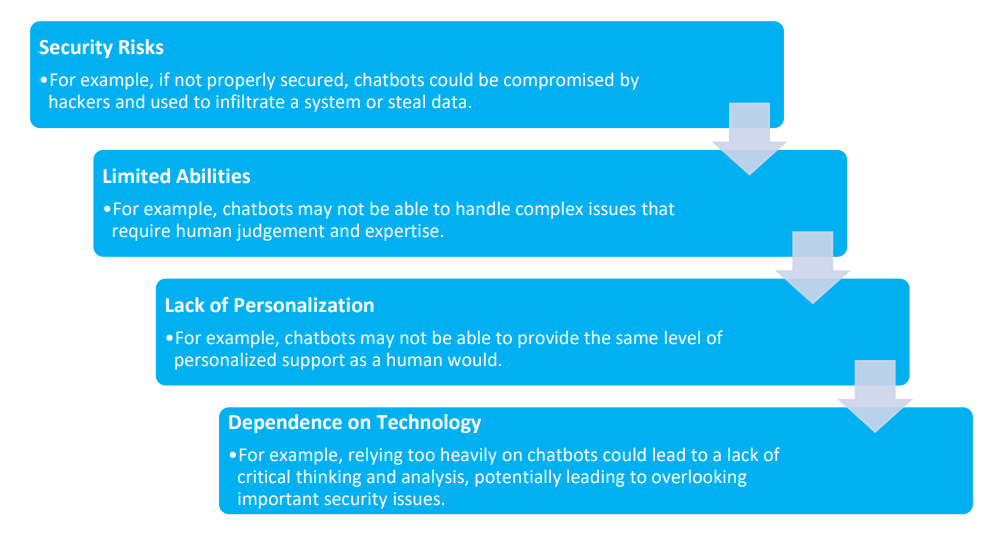

Negative Impact of Using ChatGPT in Cloud Environments:

ChatGPT for Security Audits:

Using ChatGPT for security audits can have both positive and negative impacts.

Positive impacts:

- ChatGPT can provide a quick and efficient way to gather information during security audits.

- It can be prompted to ask relevant questions about security controls, configurations, and policies.

- It can also analyze responses given by auditees to detect inconsistencies or potential risks.

- ChatGPT can help improve the accuracy of security audits: it can process large amounts of data quickly, which can reduce some of the human errors that occur with traditional auditing methods. Its output still needs to be verified by an auditor.

- Additionally, ChatGPT is available 24/7, so security audits can be conducted anytime, even outside business hours.
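The question-and-analysis workflow described in the bullets above can be sketched in Python. This is a minimal, hypothetical sketch: it only assembles the prompt in the role/content message format used by chat-style LLM APIs such as OpenAI's Chat Completions API, and it does not call any API. The control ID, auditee answers, and system prompt wording are illustrative assumptions, not a prescribed audit method.

```python
# Hypothetical sketch: build a chat-style message list asking the model to
# review auditee answers for inconsistencies. The control ID, answers, and
# prompt wording are illustrative placeholders, not real audit data.
from typing import Dict, List

SYSTEM_PROMPT = (
    "You are assisting a cloud security auditor. For each control, review the "
    "auditee's answers, flag any that contradict each other or the control "
    "objective, and list follow-up questions. Do not assume facts not stated."
)


def build_audit_messages(answers: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Return role/content messages for a chat-style LLM request.

    `answers` maps a (hypothetical) control ID to the auditee's responses.
    """
    lines: List[str] = []
    for control_id, responses in answers.items():
        lines.append(f"Control {control_id}:")
        # Number each answer so the model can refer to it in its findings.
        for i, response in enumerate(responses, start=1):
            lines.append(f"  Answer {i}: {response}")
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": "\n".join(lines)},
    ]


if __name__ == "__main__":
    sample = {
        "CTRL-01": [
            "MFA is enforced for all administrator accounts.",
            "Two admin accounts use password-only login for break-glass access.",
        ],
    }
    for message in build_audit_messages(sample):
        print(f"[{message['role']}]\n{message['content']}\n")
```

The resulting list could be passed as the `messages` argument of a chat completion request. In the sample, the two answers contradict each other, which is the kind of inconsistency the model would be asked to flag; any finding it returns would still need to be confirmed by the auditor.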

Negative impacts:

- If prompts and inputs are not designed carefully, using ChatGPT in security audits can produce false positives or false negatives. For example, it may fail to detect certain types of security threats or vulnerabilities, or it may misunderstand the meaning of certain responses.

- ChatGPT may not be able to provide the level of insight and analysis that a human auditor can provide.

- There are also concerns about the security and confidentiality of information collected through ChatGPT. If the software is not properly secured, sensitive information could be compromised, leading to significant privacy and security risks.

- ChatGPT cannot be relied on for offensive security work, so penetration testing still needs to be conducted manually.

- ChatGPT may also not reflect the latest versions of standards and frameworks, such as CSA's Cloud Controls Matrix v4 (CCM v4) or ISO/IEC 27001:2022, because its knowledge is limited to training data with a cutoff date.

Therefore, it is important to ensure that proper safeguards, such as human review of findings and controls over what data is shared with the tool, are in place when using ChatGPT in security audits.
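One such measure, when audit evidence is shared with ChatGPT, is to mask secrets and identifiers before the text leaves the organization. The Python sketch below is a minimal illustration under stated assumptions: the three regular expressions (AWS access key IDs, email addresses, IPv4 addresses) are simplified examples chosen for this sketch, are far from exhaustive, and do not replace a data-handling policy or a dedicated data loss prevention tool.

```python
# Minimal sketch: mask obvious secrets and identifiers in audit evidence
# before it is pasted into ChatGPT. The patterns are illustrative assumptions
# and far from exhaustive.
import re

# Each compiled pattern is paired with the placeholder that replaces matches.
REDACTION_RULES = [
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED_AWS_KEY_ID]"),
    # Email addresses (simplified pattern).
    (re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"), "[REDACTED_EMAIL]"),
    # IPv4 addresses (does not validate that octets are <= 255).
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "[REDACTED_IP]"),
]


def redact(text: str) -> str:
    """Return `text` with every match of each rule replaced by its placeholder."""
    for pattern, placeholder in REDACTION_RULES:
        text = pattern.sub(placeholder, text)
    return text


if __name__ == "__main__":
    evidence = (
        "Key AKIAIOSFODNN7EXAMPLE was found in a config pushed by "
        "ops@example.com from 10.0.12.7."
    )
    print(redact(evidence))
```

Run as a script, this prints `Key [REDACTED_AWS_KEY_ID] was found in a config pushed by [REDACTED_EMAIL] from [REDACTED_IP].` (the key shown is AWS's documented example value). Pattern-based masking is simple to audit, but it will miss secrets that do not match a known format, so human review of what is shared remains necessary.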

About the Author

Ashwin Chaudhary is the CEO of Accedere. He is a CPA from Colorado, MBA, CITP, CISA, CISM, CGEIT, CRISC, CISSP, CDPSE, CCSK, PMP, ISO27001 LA, ITILv3 certified cybersecurity professional with about 20 years of cybersecurity/privacy and 40 years of industry experience. He has managed many cybersecurity projects covering SOC reporting, Privacy, IoT, and Governance, Risk, and Compliance (GRC).
