How the Sys:All Loophole Allowed Us to Penetrate GKE Clusters in Production

Published 04/05/2024

Originally published by Orca Security.

Written by Ofir Yakobi.

Following our discovery of a critical loophole in Google Kubernetes Engine (GKE) dubbed Sys:All, we set out to research its real-world impact. Our initial probe alone revealed over a thousand vulnerable GKE clusters in which admins had configured RBAC bindings that made the system:authenticated group overprivileged, potentially allowing any Google account holder to access and control these clusters.

GKE, unlike other major Kubernetes services offered by CSPs such as AWS and Azure, defaults to using standard IAM for cluster authentication and authorization. This approach enables some access to the Kubernetes API server using any Google credentials, which means GKE’s system:authenticated group includes all Google users, even those outside of the organization. Since the scope of this group is easily misunderstood, administrators can unknowingly assign too many privileges and leave the GKE cluster wide open.

In this article, we delve into how widespread this issue actually is. Through a series of scans on publicly available GKE clusters, we uncovered a spectrum of data exposures with real-world consequences for numerous organizations. We will discuss the nature of these exposures and the range of sensitive information that could be compromised. Our story will show tangible examples of exploitation paths, and give practical recommendations for securing GKE clusters against these threats.

Executive Summary:

- We discovered numerous organizations whose GKE clusters had significant misconfigurations of the system:authenticated group, making them vulnerable to the Sys:All loophole discovered by Orca.

- These misconfigurations led to the exposure of various sensitive data types, including JWT tokens, GCP API keys, AWS keys, Google OAuth credentials, and private keys.

- A notable example involved a publicly traded company where this misconfiguration resulted in extensive unauthorized access, potentially leading to system-wide security breaches.

- This study highlights the critical need for stringent security protocols in cloud environments to prevent similar occurrences.

- A Threat Briefing detailing how an attacker could abuse this GKE security loophole, as well as recommendations on how to protect your clusters, will be held on January 26th at 11 am Pacific Time.

Technical Exploitation Overview

Our research set out to assess how many GKE clusters were exposed to the Sys:All loophole by inspecting clusters from a known CIDR range. We specifically targeted clusters that had custom roles assigned to the system:authenticated group. Our scans identified over a thousand clusters with varying degrees of exposure due to these custom role assignments.

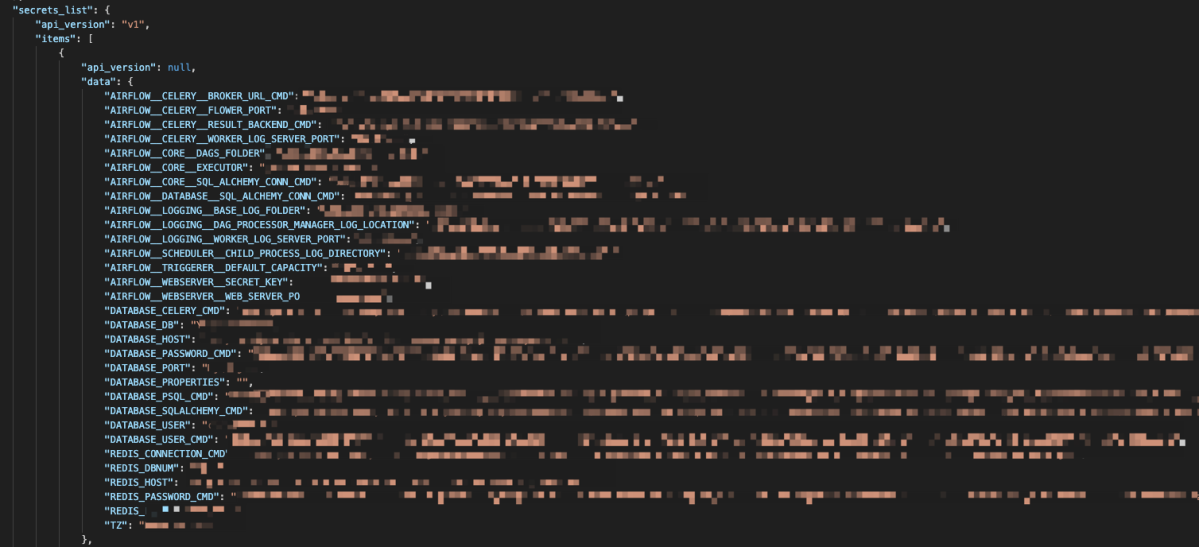

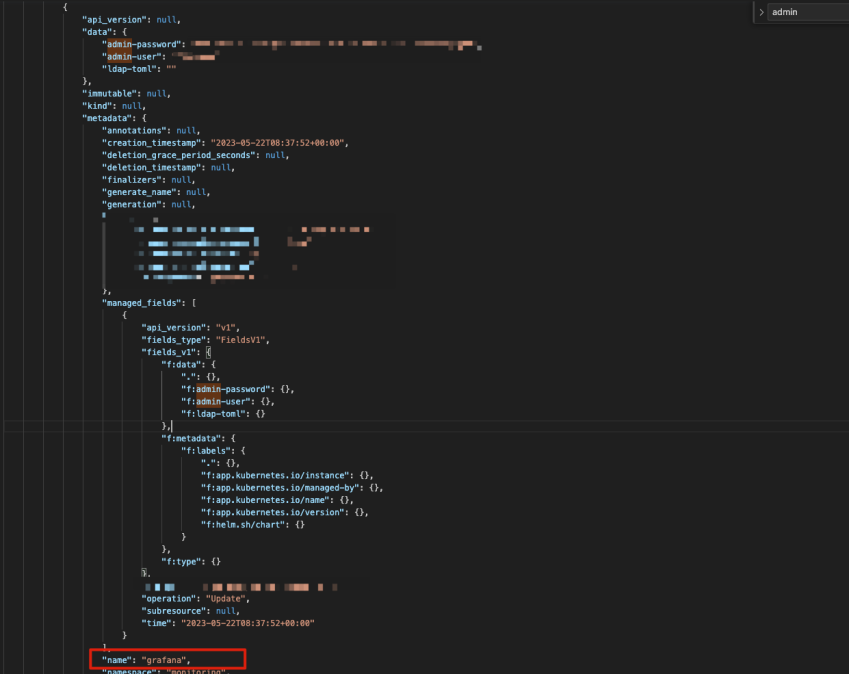

To probe these clusters, we developed a Python script that used a generic Google authentication token (obtained through the OAuth 2.0 Playground), which any Google user can get. The script was designed to interact with the Kubernetes API of these clusters and extract potentially sensitive information. We targeted data points such as configuration maps (configmaps), Kubernetes secrets, service account details, and other critical operational data. We also attempted to associate these clusters with the organizations that own them, to understand the broader impact of these misconfigurations.
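As a rough illustration of the kind of request such a script issues, the sketch below builds (but does not send) a bearer-token request against the cluster-wide list endpoints of the core Kubernetes API. It is not the script used in this research. The function name `build_list_request`, the placeholder address, and the placeholder token are assumptions made for the example, and it is intended for checking a cluster you own.

```python
import urllib.request

# Cluster-wide list endpoints of the core Kubernetes API (v1).
RESOURCE_PATHS = {
    "configmaps": "/api/v1/configmaps",
    "secrets": "/api/v1/secrets",
    "serviceaccounts": "/api/v1/serviceaccounts",
}


def build_list_request(api_server: str, resource: str, token: str) -> urllib.request.Request:
    """Build a GET request that lists one resource type across all namespaces.

    api_server and token are placeholders supplied by the caller; nothing
    is sent over the network by this function.
    """
    url = api_server.rstrip("/") + RESOURCE_PATHS[resource]
    return urllib.request.Request(
        url,
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/json",
        },
        method="GET",
    )


if __name__ == "__main__":
    # 203.0.113.10 is a documentation-only address; replace with your own cluster.
    req = build_list_request("https://203.0.113.10", "configmaps", "PLACEHOLDER_TOKEN")
    print(req.get_method(), req.full_url)
```

When such a request is sent to your own cluster with a token from an unrelated Google account, a 403 response generally indicates that the caller is authenticated but not authorized, while a 200 response with a list body suggests that the resource is readable by system:authenticated.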

We then ran a secret-detector on the retrieved data to identify and match known secret patterns and regexes that could allow further lateral movement within the organization’s environment.
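A minimal sketch of this kind of pattern matching is shown below. It is not the detector used in the research. The pattern set, the names `SECRET_PATTERNS` and `find_secrets`, and the sample input are assumptions for illustration, and real detectors use far larger rule sets and additional validation.

```python
import re

# Illustrative patterns only; each one matches a well-known token shape.
SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" (long-lived) or "ASIA" (temporary) plus 16 characters.
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Google API keys: "AIza" followed by 35 URL-safe characters.
    "gcp_api_key": re.compile(r"\bAIza[0-9A-Za-z_\-]{35}"),
    # PEM private key headers, e.g. "-----BEGIN RSA PRIVATE KEY-----".
    "private_key_header": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
    # JWTs: three base64url segments, the first starting with "eyJ".
    "jwt": re.compile(r"\beyJ[A-Za-z0-9_-]{10,}\.[A-Za-z0-9_-]{10,}\.[A-Za-z0-9_-]{10,}"),
}


def find_secrets(text: str) -> list[tuple[str, str]]:
    """Return (pattern_name, matched_text) pairs for every match in text."""
    findings = []
    for name, pattern in SECRET_PATTERNS.items():
        for match in pattern.finditer(text):
            findings.append((name, match.group(0)))
    return findings


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the example key ID used in AWS documentation.
    sample = "export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE\n"
    for name, value in find_secrets(sample):
        print(name, value)
```

Running the same function over the `data` values of your own configmaps is one way to spot credentials that should be moved into a dedicated secrets store.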

This step was crucial to understanding the real implications of these security misconfigurations, particularly in the context of potential exploitation by unauthorized entities. Through this technical examination, we gained deeper insight into the prevalence and severity of security shortcomings within these GKE clusters.

How We Accessed GKE Clusters of a NASDAQ Listed Company

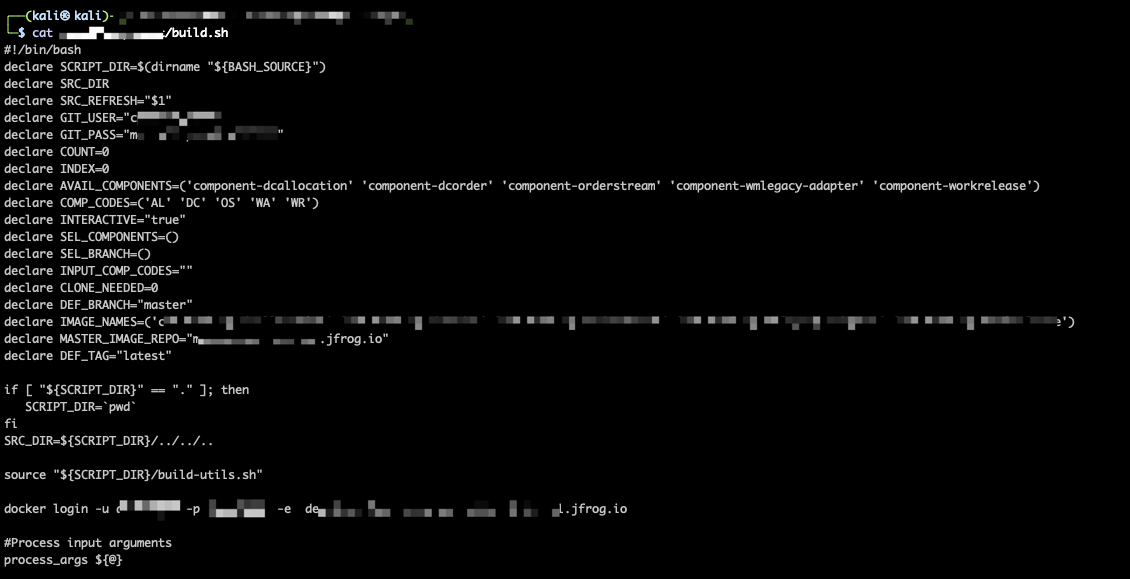

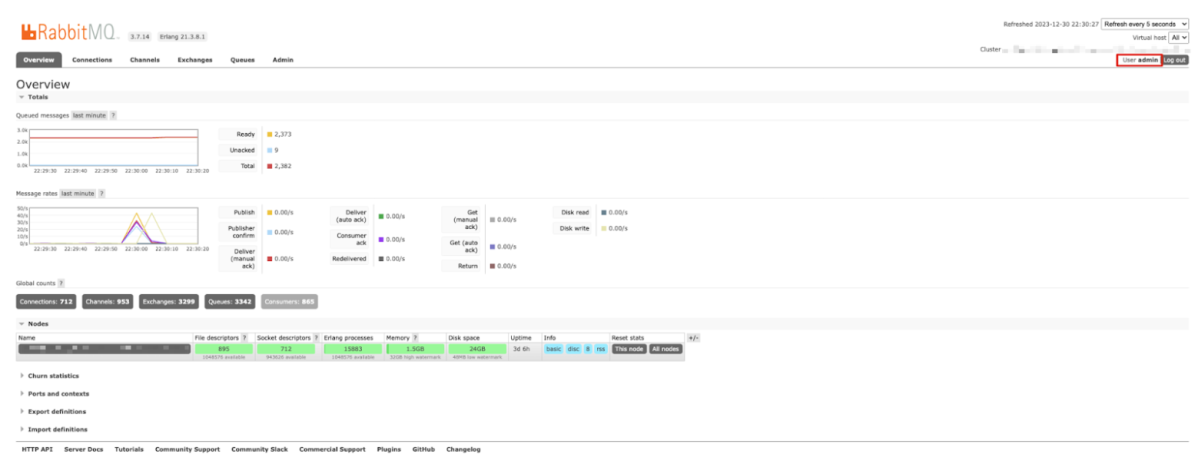

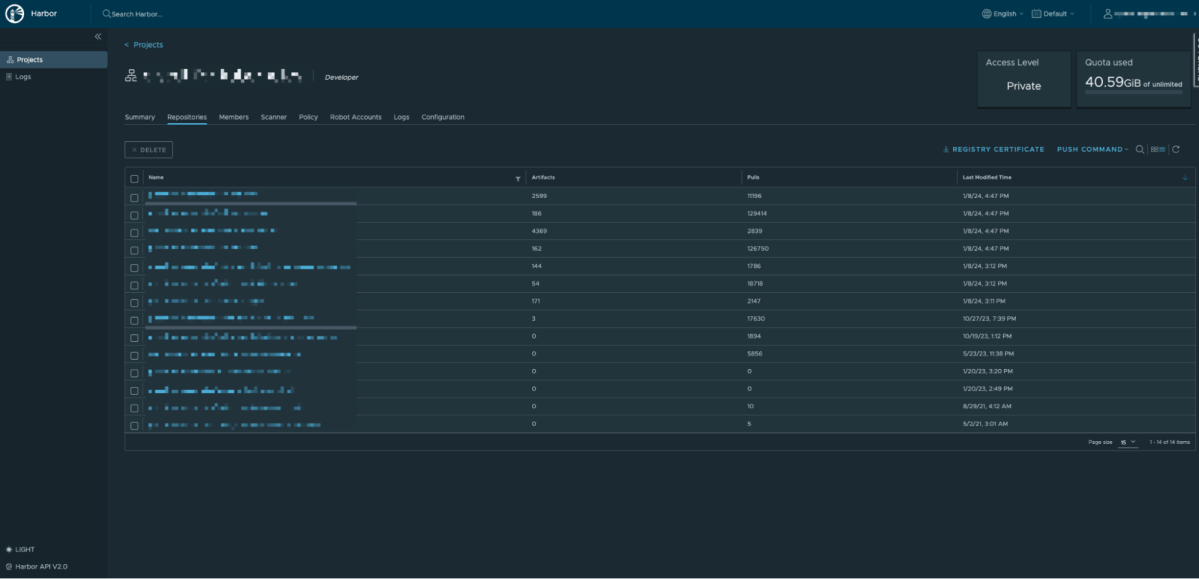

Our investigations led us to a stark discovery: exploitable GKE clusters belonging to a NASDAQ listed company. A seemingly innocuous misconfiguration of the system:authenticated group had far-reaching implications, such as allowing anyone to list and pull images from the company’s container registries and providing open access to AWS credentials stored within a cluster’s configmap (alongside other sensitive data). With these credentials, we gained access to S3 buckets containing sensitive information and logs that, upon further analysis, revealed system admin credentials and multiple valuable endpoints, including RabbitMQ, Elastic, an authentication server, and an internal system, all with administrator access.

Here’s a step-by-step account of how this misconfiguration enabled us to move laterally within the company’s digital infrastructure:

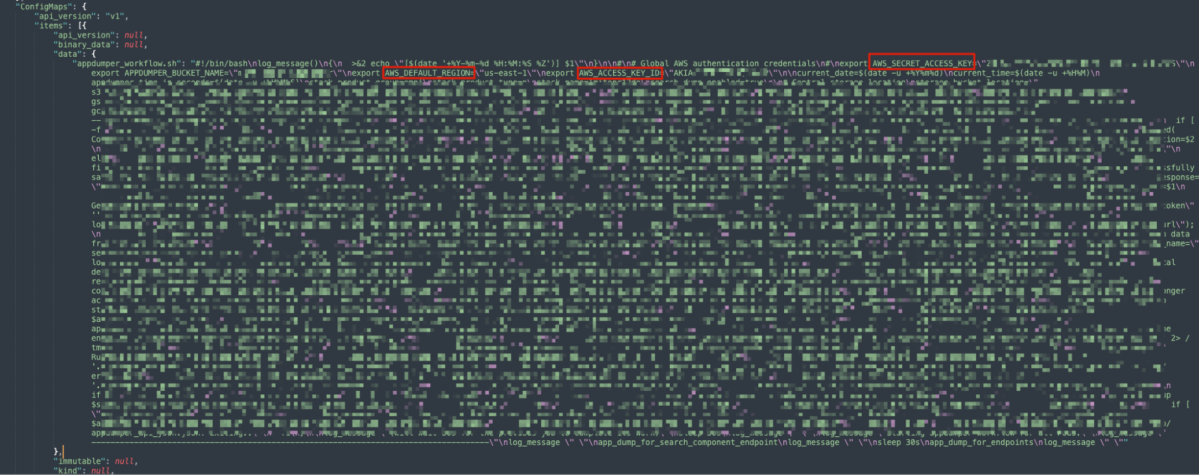

1. Initial Access: The misconfigured GKE clusters granted cluster admin permissions to the system:authenticated group, allowing us (with any Google user account) to query multiple valuable resources through the Kubernetes API, including ConfigMap resources, and to inspect their contents.

It is important to note that Google blocks the binding of the system:authenticated group to the cluster-admin role in newer GKE versions (1.28 and up). We would like to emphasize that even though this is an improvement, it still leaves many other roles and permissions (other than cluster-admin) that can be assigned to the system:authenticated group.

3. Bucket Content Examination: Using the exposed AWS credentials, we could list and download the contents of several S3 buckets. Among these were log files with detailed operational data.

4. Sensitive Information Discovery: The logs contained administrator credentials for various systems, including an internal platform used by their customers. Critically, URLs to important internal services such as ElasticSearch and RabbitMQ were also found, accompanied by superuser privileges.

After responsibly disclosing these findings to the affected company, we collaborated with them to address the vulnerabilities. This involved tightening IAM roles and permissions, securing S3 buckets, and implementing better practices around ConfigMaps. Because the secrets were embedded within bash scripts stored in the Kubernetes configmaps, we advised on and assisted with removing sensitive data from scripts, using more secure methods for managing secrets, and ensuring that configmaps were not accessible to unauthorized users.

By addressing these areas, the company was able to significantly reduce the risk of similar vulnerabilities in the future, enhancing the overall security of their cloud infrastructure.

Findings from Other Exposed GKE Clusters

In our broader examination of GKE clusters, we uncovered a variety of sensitive data exposures across multiple organizations, highlighting the extensive nature of these issues:

- Exposure of GCP API Keys and Service Account JSONs: We frequently came across GCP API keys and service account authentication JSON files left exposed. These elements are crucial for accessing GCP resources, and their exposure represents a significant security threat.

- Discovery of Private Keys: Our scans also revealed private keys within these clusters. Such keys are essential for securing communications and data access, making their exposure a major security risk.

- Access to Container Registries: We found numerous instances where credentials for various container registries were accessible. This allowed us to pull and run container images locally, a capability that could be abused to introduce malicious elements into containerized applications.

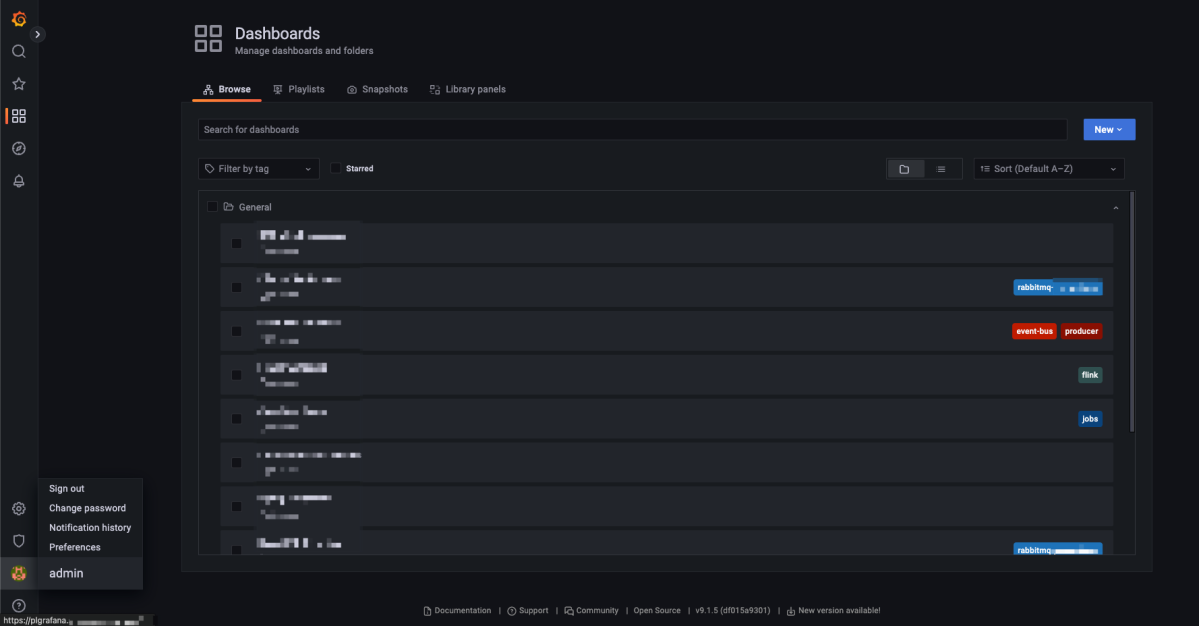

- Access to Critical Services: Our findings included unauthorized access to Grafana dashboards, RabbitMQ message brokers, and ElasticSearch clusters in different organizations. These services play critical roles in operational monitoring, messaging, and data management, respectively. Gaining access to them could lead to significant data breaches and operational disruptions.

The cumulative findings from our research painted a concerning picture of the widespread nature of security lapses in cloud environments. From critical access keys to operational data and infrastructure oversight, the diversity and depth of the data exposed underscore the urgent need for robust security measures and continuous monitoring in cloud environments.

Recommendations

This story is a real-world testament to the importance of rigorous security configurations. For GKE users, it’s vital to review cluster permissions, especially those granted to default groups such as system:authenticated. Organizations must ensure that only necessary permissions are granted, following the Principle of Least Privilege (PoLP), and that regular audits are conducted to prevent such oversights.

Google has blocked the binding of the system:authenticated group to the cluster-admin role in newer GKE versions (version 1.28 and up). However, it’s important to note that this still leaves many other roles and permissions that can be assigned to the group. This means that in addition to upgrading to GKE version 1.28 or higher, the main way to block this attack vector is to strictly follow the principle of least privilege.
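One way to start such an audit is to export the cluster's bindings with `kubectl get clusterrolebindings -o json` and look for subjects that cover every authenticated or anonymous caller. The sketch below is an assumption-laden starting point rather than a complete audit. The names `find_risky_bindings`, `RISKY_SUBJECTS`, and `DEFAULT_ROLES` are hypothetical, and the allowlist contains only the discovery roles that upstream Kubernetes binds to these groups by default, so adjust it to your environment.

```python
import json

# Subjects that match far more callers than most admins expect.
RISKY_SUBJECTS = {"system:authenticated", "system:unauthenticated", "system:anonymous"}

# Roles that upstream Kubernetes binds to these groups by default (assumed allowlist).
DEFAULT_ROLES = {"system:basic-user", "system:discovery", "system:public-info-viewer"}


def find_risky_bindings(binding_list: dict) -> list[tuple[str, str, str]]:
    """Return (binding_name, subject_name, role_name) for non-default grants.

    binding_list is the parsed JSON from
    `kubectl get clusterrolebindings -o json` (a List object with "items").
    """
    risky = []
    for item in binding_list.get("items", []):
        binding = item.get("metadata", {}).get("name", "")
        role = item.get("roleRef", {}).get("name", "")
        if role in DEFAULT_ROLES:
            continue
        # "subjects" may be absent or null on some bindings.
        for subject in item.get("subjects") or []:
            if subject.get("name") in RISKY_SUBJECTS:
                risky.append((binding, subject["name"], role))
    return risky


if __name__ == "__main__":
    # Hypothetical file name; produce it with the kubectl command above.
    with open("clusterrolebindings.json") as fh:
        for binding, subject, role in find_risky_bindings(json.load(fh)):
            print(f"{binding}: {subject} -> {role}")
```

The same function can be applied to the output of `kubectl get rolebindings --all-namespaces -o json`, since namespaced RoleBindings can grant these groups access as well.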
