How we ended up with #log4shell aka CVE-2021-44228

Blog Article Published: 01/10/2022

Quick note: from now on I will refer to log4j version 2 as “log4j2”

To learn how to deal with the critical vulnerability in log4j2, read the first blog in this series, Dealing with log4shell. To get a breakdown of the timeline of events, refer to the second blog, Keeping up with log4shell.

So how did we end up in this #log4shell situation?

#log4shell is actually two intertwined problems:

- The vulnerability in log4j

- The widespread usage of log4j, especially in ways that are not amenable to easy updates

There are lots of widely used OpenSource software packages, many of which have had similarly severe vulnerabilities, so why haven’t they resulted in #log4shell style situations?

Feature, speed, and agility first. Security, documentation, and safety are an afterthought.

Most software development has historically focussed on features, simply put: you need the software to do something useful, or why else put time and effort into building it at all? How we build software has evolved (specifications, waterfall, user stories, etc.) but the fundamental truth is “we need this software to do something useful” and I don’t think that will ever change.

This results in an interesting situation with a lot of OpenSource software: if someone wants a feature and campaigns hard enough, especially if they write the feature, they can often get devs to accept the code and get the feature they want. This is great for code and feature velocity, and one of the big reasons OpenSource won. But it can result in code being accepted that hasn’t been fully thought out or understood and may contain security vulnerabilities. One unique aspect of security vulnerabilities is especially troublesome here: they don’t stand out until someone really goes looking for them. Normal bugs like “this is the wrong color” or “when I put in a leap year the numbers come out negative” show up, but by definition most security vulnerabilities don’t show up unless someone explicitly triggers them.

Even the people that care deeply about security get this wrong

A perfect example of this kind of failure was CVE-2016-0777 (assigned by yours truly), to quote the OpenSSH changelog:

* SECURITY: ssh(1): The OpenSSH client code between 5.4 and 7.1 contains experimential support for resuming SSH-connections (roaming). The matching server code has never been shipped, but the client code was enabled by default and could be tricked by a malicious server into leaking client memory to the server, including private client user keys.

In other words, an entity pushed for a feature, got the client side code added, then never added the server side code to support it. The client side code sat, unused but active, until someone discovered the vulnerability in it. The OpenSSH solution was to disable it by default and then remove the unsupported code.

Just make it work!

This leads to the second major cultural problem with most software: features are enabled by default, even if most people will never use them or know they exist. Again, from the developer point of view this makes sense, and for most features it’s not a problem. Until it is a problem, and the entire world has to scramble to disable something so that they don’t get attacked and compromised. If every single feature had to be enabled, you would see a ton of support requests “why doesn’t X work?” “because you need to enable it” and it would result in a massive amount of friction to use the software in question. There are some very difficult questions here which I can’t answer, things like “how widely is this feature used?” and “how dangerous is this feature likely to be?”

Make it work with other stuff!

Here we arrive at a third major problem: software is not an island. Most software interacts with other software and protocols (where else would it get and send the data it is processing?). Quite often a “simple” software component will interact with one or more complicated other software packages or protocols, like HTTP/HTTPS, or in the case of log4j, JNDI (Java Naming and Directory Interface). I tried to find a copy of the JNDI SPI and API specifications, but all the links are to dead sites (e.g. fto.javasoft.com). I was going to say “look at how complicated this specification is, interacting with it safely is very difficult”, but in light of the specifications no longer being easily found, I’m going to say “how are you supposed to interact safely with something that you can’t even find the documentation for from official sources?”

There are numerous other problems but I’m going to cover a fourth one and then summarize the rest. The fourth one is as simple as it is complicated: old software is harder to update. This is likely due to survivor bias: if it was easy to update, it would have been updated. And regarding the increasing cost of technical debt, by definition you are more likely to be able to afford a minimal effort to keep the software running vs. the cost of rewriting or replacing it with something new. There are other aspects as well: do you have anyone that understands the software? Is there institutional knowledge embedded in the software and can it be extracted? And so on. These all add to the risk of replacing something that works with something that does not work.

A short summary of the common problems:

- Inability to know how widely a library or piece of software is used

- Inability to know how widely used a feature is

- Complexity and danger in adding support for other software/protocols

- Defaulting features to on

- Lack of any documentation for features

- Lack of context in the documentation (this feature causes network traffic, trusts network responses, etc.)

- Refusal or hesitancy to break backwards compatibility, even if it is just changing a default to off and giving people a switch to turn it on

- Adding features vs. adding features fully (e.g. threat modelling, documentation, engaging with the community of users fully)

- In the OpenSource world the majority of users will never talk to you, even when things blow up

- Old code is by definition harder to update, if it was easy to update you wouldn’t have the old code floating around

Everything you buy/build/deploy has an operational maintenance cost, even if you fully ignore it, at some point it will require effort to replace or get rid of.

Conclusion

I’m not sure that the balance of “feature first” vs. “safety first” is actually that problematic right now. While incidents such as log4shell definitely cause pain, they aren’t the end of the world. I suspect part of the challenge is that the cost of security problems is largely externalized from the developers and projects writing and shipping the software, in fact most licenses explicitly disclaim any liability. In the case of OpenSource, where you are free to use it or not use it, it would be hard to make an argument for liability. I think it’s important to remember that free OpenSource is like receiving a free puppy, not a free beer.

Addendum

As I wrote this, a further two CVEs came out, nowhere near as bad as the originals, but one is due to the configuration file supporting JavaScript execution within it (seriously, config files should not contain executed code/scripts directly…) and the other is just a denial-of-service when processing self referential lookups (things should probably have timeouts by default and be more careful about trusting user input blindly).

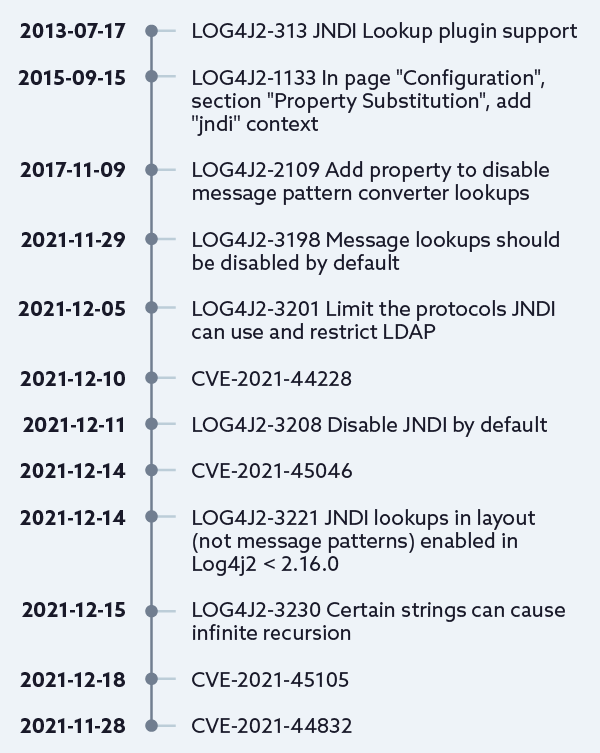

Timeline

Trending This Week

#1 The 5 SOC 2 Trust Services Criteria Explained

#2 What You Need to Know About the Daixin Team Ransomware Group

#3 Mitigating Security Risks in Retrieval Augmented Generation (RAG) LLM Applications

#4 Cybersecurity 101: 10 Types of Cyber Attacks to Know

#5 Detecting and Mitigating NTLM Relay Attacks Targeting Microsoft Domain Controllers

Related Articles:

Navigating the XZ Utils Vulnerability (CVE-2024-3094): A Comprehensive Guide

Published: 04/25/2024

Do You Know These 7 Terms About Cyber Threats and Vulnerabilities?

Published: 04/19/2024

10 Tips to Guide Your Cloud Email Security Strategy

Published: 04/17/2024