Automated Cloud Remediation – Empty Hype, Viable Strategy, or Something in Between?

Published 05/17/2024

Originally published by Tamnoon.

Written by Idan Perez, CTO, Tamnoon.

What role does automation play in cloud remediation? Will it replace or simply augment the role of security and R&D teams?

Over 60% of the world’s corporate data now resides in the cloud, and securing this environment has become a daunting task. The vast attack surface and countless potential misconfigurations pose significant challenges for security and operations teams. Amidst this complexity, automation has emerged as a potential solution, with the rise of AI further fueling interest in automated remediation.

Many vendors tout automated remediation as the silver bullet for cloud security woes. However, based on our conversations with frontline practitioners, we believe the reality is more nuanced. In this article, we’ll explore the current state of automated cloud remediation and try to separate hype from reality.

What Exactly Are We Automating?

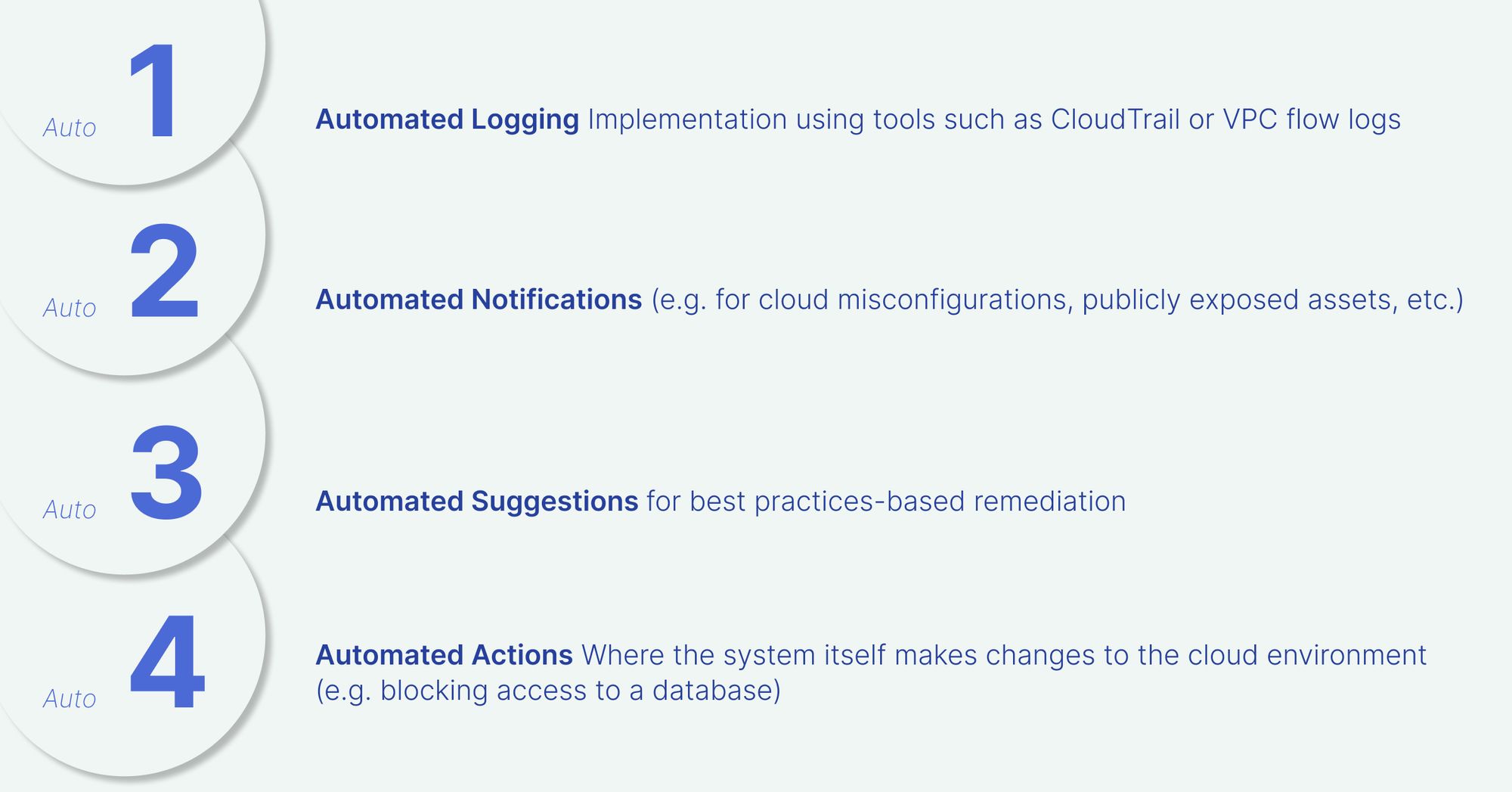

The first question we need to answer is what we mean when we talk about automation. There’s a broad spectrum between ‘fully manual’ and ‘hands-free.’ Here are some cloud security actions that we automate today:

Levels 1-3 would raise few objections among enterprise decision-makers. But it’s the fourth level that seems to both captivate imaginations and raise the most concerns.

Are we ready for a world where security is entirely handled by automated code, taking humans out of the equation? Are we ready for self-driving security? Our working assumption – which has been validated time and again in working with enterprises of all shapes and sizes – is that the answer is basically “not yet.”

When Good Automation Goes Bad

While the promise of automated remediation is alluring, the reality is that poorly implemented automation can do more harm than good. In their eagerness to adopt the latest innovations, organizations often learn the hard way that modifying production infrastructure without proper testing and oversight can lead to broken applications and lost revenue.

In our work with clients, we’ve seen organizations forced to choose between two undesirable outcomes: break production or leave systems vulnerable. Real-world examples illustrate the complexity of this area and the potential impact of overreliance on automation:

- Dangerous loops: The complexity of cloud environments can lead to clashes between different automated detection and response tools. In one case we encountered, a CloudFormation template was deployed with a security group that allowed all IPv4 traffic. An automated tool promptly detected this misconfiguration and removed the overly permissive rule. However, CloudFormation’s drift detection flagged the discrepancy between the running configuration and the template definition, and the insecure rule was dutifully reinstated to match the template. This cycle repeated for an entire weekend before anyone noticed and resolved it.

- Production outages: Configuration changes made by an auto-remediation script can lead to unforeseen outages of production resources, leaving customers unable to connect or log in. In one high-profile incident, Meta attributed a major Facebook outage in October 2021 to a “faulty configuration change.” While the exact cause was not disclosed, it’s worth noting that Facebook has relied on automated remediation systems like FBAR (Facebook Auto-Remediation) since 2011.
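One common safeguard against loops like the weekend-long one described above is a circuit breaker: if the same finding on the same resource keeps reappearing after being fixed, the automation stops and hands the problem to a person. The sketch below is a minimal, hypothetical illustration of that idea; the class name, the `max_attempts` and `window_seconds` thresholds, and the resource and finding identifiers are all assumptions, not part of any real tool.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field


@dataclass
class RemediationCircuitBreaker:
    """Stop auto-remediating a finding once the same fix keeps being undone.

    Hypothetical helper: max_attempts and window_seconds are illustrative
    thresholds, not values taken from any product.
    """

    max_attempts: int = 3
    window_seconds: float = 3600.0
    _history: defaultdict = field(
        default_factory=lambda: defaultdict(deque), init=False, repr=False
    )

    def should_auto_remediate(self, resource_id: str, finding: str, now: float) -> bool:
        attempts = self._history[(resource_id, finding)]
        # Drop attempts that fall outside the sliding time window.
        while attempts and now - attempts[0] > self.window_seconds:
            attempts.popleft()
        if len(attempts) >= self.max_attempts:
            # The finding keeps coming back, so something (for example an IaC
            # redeploy) is reverting the fix: escalate to a human instead.
            return False
        attempts.append(now)
        return True


# Simulate the permissive rule reappearing every 10 minutes.
breaker = RemediationCircuitBreaker(max_attempts=3, window_seconds=3600)
decisions = [
    breaker.should_auto_remediate("sg-example", "open-ipv4-ingress", t * 600)
    for t in range(5)
]
print(decisions)  # [True, True, True, False, False]
```

After three fixes within an hour, the breaker refuses further automatic changes, so the loop surfaces within minutes rather than running unnoticed all weekend.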

Automation is most viable when the remediation can be easily codified and when all the context it needs can be fully derived from a query. Otherwise, if the data provided to the automation is incomplete or wrong, the automation will make poor decisions, often in subtle and hard-to-detect ways, resulting in a loss of control and highly problematic unintended consequences.
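The two conditions in the paragraph above can be expressed as a routing rule: run a fix unattended only if it is on a list of codified actions and every piece of context it depends on was actually returned by the query. The sketch below assumes hypothetical action names and context keys. Note that it can only catch context that is missing; it cannot detect context that is present but wrong, which is exactly the subtle failure mode the paragraph warns about.

```python
from dataclasses import dataclass

# Hypothetical allowlist of remediations simple enough to codify safely.
CODIFIED_ACTIONS = {"enable_bucket_encryption", "enable_access_logging"}

# Hypothetical context each codified action needs before it may run unattended.
REQUIRED_CONTEXT = {
    "enable_bucket_encryption": {"owner", "environment"},
    "enable_access_logging": {"owner", "log_destination"},
}


@dataclass
class Finding:
    resource_id: str
    action: str    # the proposed remediation
    context: dict  # facts gathered by querying the environment


def route(finding: Finding) -> str:
    """Return "auto" only when the fix is codified and its context is complete."""
    if finding.action not in CODIFIED_ACTIONS:
        return "human"
    missing = {
        key
        for key in REQUIRED_CONTEXT[finding.action]
        if finding.context.get(key) in (None, "")
    }
    # Missing context means the automation would be guessing.
    return "human" if missing else "auto"


print(route(Finding("bucket-a", "enable_bucket_encryption",
                    {"owner": "team-x", "environment": "dev"})))  # auto
print(route(Finding("bucket-b", "enable_bucket_encryption",
                    {"owner": "team-x"})))  # human
print(route(Finding("sg-1", "close_security_group", {"owner": "team-y"})))  # human
```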

Isn’t It All Just IaC Nowadays?

Proponents of fully automated remediation often point to the rise of infrastructure-as-code (IaC) tools, arguing that many issues can be caught and fixed in the code review stage without the need for human intervention. However, this view overlooks a few challenges:

- IaC adoption isn’t universal: over two-thirds of resources in AWS, for example, are still deployed using APIs, SDKs, or the console rather than using IaC tools.

- Even in an IaC-driven world, automation is not a panacea. One common issue is “IaC drift,” where the actual state of the infrastructure diverges from the desired state defined in the code. This drift introduces unknowns and elevated risks, which can lead to the same sorts of unintended consequences we’ve discussed.

- IaC is only as good as the processes and practices around it. If an organization has poor change management practices, or if developers don’t follow best practices for IaC development, the benefits of IaC can be quickly undermined. For example, production immutability is a critical concept in DevSecOps: we don’t make changes to production; instead, we make changes to the code that builds production, which undergoes rigorous testing before being deployed. However, manual changes made outside the IaC tooling bypass all of these well-intentioned processes.
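At its core, drift detection is a property-by-property comparison between the state declared in code and the state the cloud provider reports. The sketch below reduces both to flat dictionaries, which is a deliberate simplification (real tools such as CloudFormation drift detection work on much richer resource models), and the property names are hypothetical.

```python
def detect_drift(desired: dict, actual: dict) -> dict:
    """Diff the state declared in code against the state the cloud reports.

    Both arguments map a property name to its value; the result maps each
    mismatched property to a (desired, actual) pair. None means "absent".
    """
    drift = {}
    for key in sorted(desired.keys() | actual.keys()):
        want, have = desired.get(key), actual.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


# Hypothetical example: the template opens the rule to the world, and someone
# tightened it by hand in the console.
template = {"ingress_cidr": "0.0.0.0/0", "port": 443}
running = {"ingress_cidr": "10.0.0.0/8", "port": 443, "description": "hotfix"}
print(detect_drift(template, running))
# {'description': (None, 'hotfix'), 'ingress_cidr': ('0.0.0.0/0', '10.0.0.0/8')}
```

In this example the drifted state is the more secure one. Blindly resetting the running configuration to match the template would reinstate the permissive rule, which is why, under production immutability, the durable fix belongs in the template itself.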

Automation for Me but Not for Thee

Another point to remember is that it’s not just systems that are connected – it’s people.

Cloud security is an ecosystem made up of diverse stakeholders, often with competing interests: developers, security teams, DevOps, and specific business units. This means that attempts to fix misconfigurations can sometimes backfire, breaking production environments or driving up costs for others in the organization. We call this phenomenon “remediation anxiety” – the fear that remediating one issue might inadvertently cause a cascade of new problems. This puts cybersecurity practitioners at risk of violating their own version of the Hippocratic Oath to “first, do no harm.”

For example, an automated tool might detect an open security group and automatically close it without realizing that a business-critical application depends on that access. Suddenly, the application stops working, and the team is left scrambling to figure out what went wrong.
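Before closing an open rule, an automated tool can at least check whether anything outside the network has recently used it. The sketch below assumes simplified, hypothetical flow records (not a real flow-log schema) and treats `10.0.0.0/8` as the internal range purely for illustration. An empty result is weak evidence, not proof: a dependency that only runs monthly, for instance, would not show up in a short window, which is why a non-empty result should route the change to a human and an empty one should still be treated with care.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRecord:
    """Simplified, hypothetical flow-log entry (not a real provider schema)."""
    src_ip: str
    dst_port: int


def external_dependents(flows: list[FlowRecord], port: int,
                        internal_cidr: str = "10.0.0.0/8") -> set[str]:
    """Return external source IPs that recently sent traffic to `port`.

    A non-empty result means closing the rule may break a live dependency,
    so the change should go to a human rather than run automatically.
    """
    internal = ipaddress.ip_network(internal_cidr)
    return {
        f.src_ip
        for f in flows
        if f.dst_port == port and ipaddress.ip_address(f.src_ip) not in internal
    }


flows = [
    FlowRecord("10.1.2.3", 443),      # internal caller
    FlowRecord("203.0.113.7", 443),   # external caller (documentation range)
    FlowRecord("198.51.100.9", 22),   # external, different port
]
print(external_dependents(flows, 443))   # {'203.0.113.7'}
print(external_dependents(flows, 8080))  # set()
```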

Unexpected consequences and potential downtime make teams hesitant to fully automate remediation.

Fixing cloud security issues requires coordination between multiple teams – we call this the “cloud security remediation tango,” where the security team identifies issues, and the R&D team implements fixes.

Different groups perceive the introduction of unsupervised automation into this delicate dance differently. The security team may welcome automated remediation as a way to cope with the overwhelming volume of threats and to remediate issues faster, while the R&D team may worry that unsupervised automation could cause unpredictable issues in production. R&D teams are often measured on uptime, which makes them much more sensitive to fixes that could endanger application availability.

One of the biggest allures of the cloud is the idea that it’s “always available” without having to manage infrastructure – which allows companies to bring features to market quickly, reduce costs, and shift spending from CapEx to OpEx. Organizations are highly wary of introducing tools that can disrupt this vision and lead to unexpected failures.

These divergent viewpoints create a push-pull dynamic around automation adoption. Ultimately, the decision to automate must be made collaboratively – it cannot be decreed by security leaders, even if it does create some potential efficiencies in their workflows.

Doesn’t AI Solve This?

In 2024, it’s impossible to talk about automation without talking about AI. Advances in this area show much initial promise. But while AI can help identify potential misconfigurations and vulnerabilities more efficiently, it still struggles with the nuances and context that are essential for effective remediation.

AI systems are often opaque, making it difficult to understand how they arrive at their recommendations. This lack of transparency can breed mistrust and hesitation about acting on AI-driven remediation. When users cannot see inside the “black box,” they will not trust it to execute changes, and may barely trust the information it provides.

Explaining an AI system’s conclusions and reasoning can be almost as hard as solving the original problem; it is a technical domain in its own right, known as explainable AI. And after even one bad experience (a false positive, a false negative, a misinterpretation, etc.), lost trust can be difficult to regain.

Other areas that need to be considered:

- Cost efficiency: Cloud environments change constantly, so models need frequent retraining, which drives up costs.

- Context and AI awareness: AI needs organization-specific context to make sound decisions. Unfortunately, much of that knowledge is never documented, leaving gaps in what the AI can know.

- Statistical bias and inaccuracies: AI continues to struggle with statistical bias introduced through algorithms and training data. It may flag benign behavior as malicious or miss real risks. In cloud security, a single mistake can result in damage, massive costs, and lost trust.

Embrace Automation but Keep Humans in the Loop

While few are ready to go all-in on automated cloud remediation – whether AI-driven or otherwise – this does not mean that organizations must resign themselves to manually chasing down every last vulnerability.

Our guiding principle when automating remediation is to build copilots, not autopilots. While the hand that pulls the switch needs to stay human, there is ample room for automation that streamlines processes, provides valuable context, and removes repetitive busywork. The rise of AI presents a valuable opportunity to create systems that help humans be more effective in managing cloud security threats.
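One way to express “copilot, not autopilot” in code is to let automation do everything up to the change itself (gathering context, explaining its reasoning, preparing the fix) while refusing to execute until a named person approves. The sketch below is a hypothetical illustration; the class, its fields, and the sample values are assumptions. The `rationale` field also speaks to the transparency concern discussed earlier: the reviewer sees why the fix was proposed, not just what it does.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class RemediationProposal:
    """Automation drafts the fix; a named human decides whether it runs."""
    resource_id: str
    summary: str
    rationale: list[str]        # context gathered automatically, shown to the reviewer
    apply: Callable[[], None]   # the change itself, prepared but not yet executed
    approved_by: Optional[str] = None
    executed: bool = False

    def approve(self, reviewer: str) -> None:
        self.approved_by = reviewer

    def execute(self) -> None:
        # The hand that pulls the switch stays human: no approval, no change.
        if self.approved_by is None:
            raise PermissionError(f"{self.resource_id}: awaiting human approval")
        self.apply()
        self.executed = True


changes = []  # stands in for the real cloud API call in this sketch
proposal = RemediationProposal(
    resource_id="sg-example",
    summary="Restrict port 443 ingress to the corporate range",
    rationale=["Rule allows 0.0.0.0/0 on port 443"],
    apply=lambda: changes.append("sg-example: ingress restricted"),
)
try:
    proposal.execute()
except PermissionError as err:
    print(err)  # sg-example: awaiting human approval
proposal.approve("alice@example.com")
proposal.execute()
print(changes)  # ['sg-example: ingress restricted']
```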

The fact is, today’s IT infrastructures are complex, interconnected, and absolutely critical to business operations. Decisions about whether to block a resource or allow a deviation from best practices require careful consideration of the broader business context. Automation can inform and support these decisions, but it cannot replace human judgment. Accepting the limitations of automation will help the industry focus on the kind of solutions organizations can trust today: ones that keep humans firmly in the loop.

Automation does have a vital role to play as an enabler of human decision-making rather than a substitute for it. Machine learning and AI have the potential to significantly reduce the burden on human operators by helping to prioritize and contextualize incidents, suggesting remediation pathways, or streamlining manual processes such as ticket creation. We are confident that there is still much to explore in this direction – and that the end result will allow organizations to strike a better balance between security and product.
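As a small illustration of prioritizing, contextualizing, and streamlining ticket creation, the sketch below scores incidents by combining base severity with environmental context, then drafts a ticket for the owning team. The scoring weights, field names, and team names are hypothetical; a real program would tune them to its own risk model. Note that the output is a ticket for a person to act on, not an automatic fix.

```python
from dataclasses import dataclass

# Illustrative weights only; a real program would tune these to its risk model.
SEVERITY = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass
class Incident:
    title: str
    severity: str
    internet_exposed: bool
    production: bool
    owner: str


def priority(incident: Incident) -> int:
    """Higher score = look at it sooner. Context adds to the base severity."""
    score = SEVERITY[incident.severity] * 10
    if incident.internet_exposed:
        score += 15
    if incident.production:
        score += 10
    return score


def draft_ticket(incident: Incident) -> dict:
    """Prepare a ticket for the owning team; a human still decides on the fix."""
    return {
        "assignee": incident.owner,
        "title": f"{incident.title} (score {priority(incident)})",
        "body": (
            f"Severity: {incident.severity}\n"
            f"Internet exposed: {incident.internet_exposed}\n"
            f"Production: {incident.production}"
        ),
    }


incidents = [
    Incident("Unencrypted dev bucket", "medium", False, False, "data-team"),
    Incident("Open SSH on web server", "high", True, True, "web-team"),
]
queue = sorted(incidents, key=priority, reverse=True)
print([i.title for i in queue])  # ['Open SSH on web server', 'Unencrypted dev bucket']
print(draft_ticket(queue[0])["title"])  # Open SSH on web server (score 55)
```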
